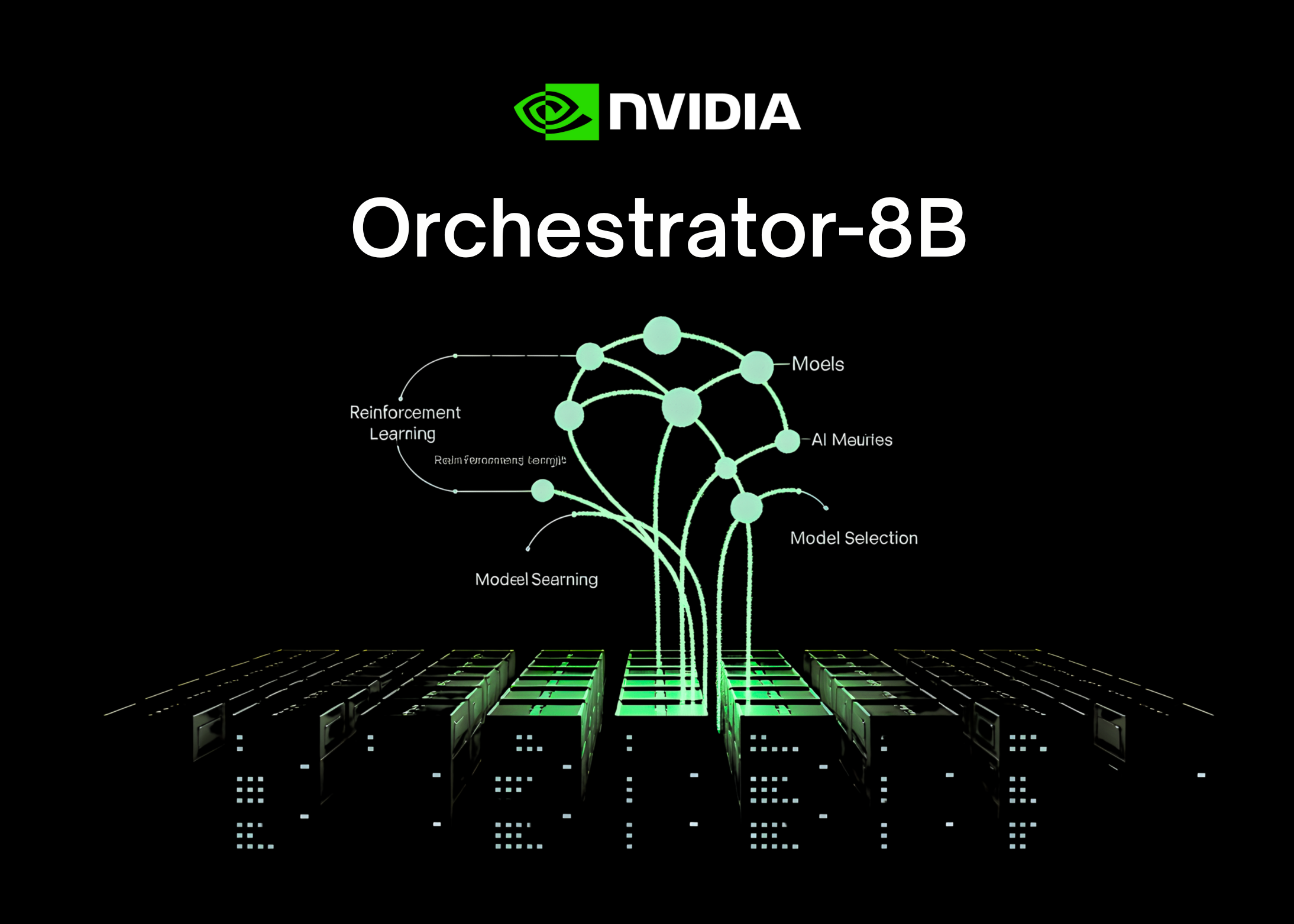

NVIDIA’s Tool Orchestrator: Orchestrator-8B Explained

NVIDIA Research built Orchestrator-8B to handle tool and model selection in AI agents. Instead of relying on one large model for all the reasoning, this 8B-parameter model acts as a controller: it chooses among tools such as web search and code interpreters, as well as other LLMs, to complete a task. MarkTechPost covered the release on November 28, 2025, describing it as part of NVIDIA's ToolOrchestra method.

Why It Beats Self-Routing

Large models like GPT-5 tend to route work to themselves or to a handful of strong models, regardless of cost. In a pilot study, Qwen3-8B acting as the router sent work to GPT-5 73% of the time, and GPT-5 chose itself or GPT-5 mini 98% of the time. NVIDIA's approach instead trains a dedicated small model to spread work across cheaper, faster options without those biases, as detailed in the MarkTechPost article.

How Orchestrator-8B Works

It is a fine-tuned Qwen3-8B, a decoder-only Transformer. At runtime it runs a loop of up to 50 turns: it reads the user query and any preferences (such as low latency), generates reasoning, picks a tool, and emits the call as JSON. The tool runs, its result is fed back into the context, and the loop repeats until the task is done.
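The loop described above can be sketched in Python as follows. This is a minimal illustration, not NVIDIA's implementation: the JSON shape (`{"tool": ..., "arguments": ...}`), the `final_answer` pseudo-tool, the tool names, and `scripted_policy` (a stand-in for the model that skips the free-text reasoning step) are all hypothetical.

```python
import json
from typing import Callable

MAX_TURNS = 50  # turn cap mentioned in the article

# Hypothetical stubs; real tools would call Tavily, a code sandbox, or another LLM.
TOOLS: dict[str, Callable[..., str]] = {
    "web_search": lambda query: f"search results for {query!r}",
    "math_llm": lambda prompt: "42",
}

def scripted_policy(context: list[dict]) -> str:
    """Stand-in for Orchestrator-8B: returns one JSON tool call as text.

    This toy policy searches once, consults the math model once, then answers.
    """
    query = context[0]["content"]
    tool_turns = sum(1 for msg in context if msg["role"] == "tool")
    if tool_turns == 0:
        call = {"tool": "web_search", "arguments": {"query": query}}
    elif tool_turns == 1:
        call = {"tool": "math_llm", "arguments": {"prompt": query}}
    else:
        call = {"tool": "final_answer", "arguments": {"text": context[-1]["content"]}}
    return json.dumps(call)

def orchestrate(query: str, preferences: str,
                policy: Callable[[list[dict]], str] = scripted_policy) -> tuple[str, int]:
    """Run the read -> decide -> call -> observe loop until an answer or the turn cap."""
    context = [
        {"role": "user", "content": query},
        {"role": "system", "content": f"preferences: {preferences}"},
    ]
    for turn in range(1, MAX_TURNS + 1):
        call = json.loads(policy(context))  # the model emits one JSON call per turn
        if call["tool"] == "final_answer":
            return call["arguments"]["text"], turn
        result = TOOLS[call["tool"]](**call["arguments"])  # execute the chosen tool
        context.append({"role": "assistant", "content": json.dumps(call)})
        context.append({"role": "tool", "content": result})  # feed the result back
    return "turn limit reached", MAX_TURNS
```

With the scripted policy, `orchestrate("What is 6 * 7?", "low latency")` returns `("42", 3)`: one search call, one math-model call, then the answer. Swapping in a real model only changes `policy`; the loop itself stays the same.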

- Basic tools: Tavily search, Python code sandbox, Faiss index with Qwen3-Embedding-8B.

- Specialist LLMs: Qwen2.5-Math-72B, Qwen2.5-Math-7B, Qwen2.5-Coder-32B.

- General LLMs: GPT-5, GPT-5 mini, Llama 3.3-70B-Instruct, Qwen3-32B.
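Because basic tools, specialist LLMs, and general LLMs are all exposed to the orchestrator as callable tools, one way to handle them uniformly is a single catalog that each JSON call is validated against before dispatch. The sketch below assumes every tool takes exactly one string argument; the identifiers and argument names are hypothetical, not ToolOrchestra's actual schema.

```python
import json
from dataclasses import dataclass

@dataclass(frozen=True)
class ToolSpec:
    name: str
    category: str  # "basic", "specialist", or "general"
    arg: str       # name of the single string argument the tool takes (assumed)

# Catalog mirroring the list above; identifiers and argument names are hypothetical.
CATALOG = [
    ToolSpec("tavily_search", "basic", "query"),
    ToolSpec("python_sandbox", "basic", "code"),
    ToolSpec("faiss_retrieval", "basic", "query"),
    ToolSpec("Qwen2.5-Math-72B", "specialist", "prompt"),
    ToolSpec("Qwen2.5-Math-7B", "specialist", "prompt"),
    ToolSpec("Qwen2.5-Coder-32B", "specialist", "prompt"),
    ToolSpec("GPT-5", "general", "prompt"),
    ToolSpec("GPT-5 mini", "general", "prompt"),
    ToolSpec("Llama-3.3-70B-Instruct", "general", "prompt"),
    ToolSpec("Qwen3-32B", "general", "prompt"),
]
BY_NAME = {spec.name: spec for spec in CATALOG}

def parse_call(raw: str) -> tuple[ToolSpec, str]:
    """Decode one JSON tool call and check it against the catalog before dispatch."""
    call = json.loads(raw)
    spec = BY_NAME.get(call.get("tool"))
    if spec is None:
        raise ValueError(f"unknown tool: {call.get('tool')!r}")
    args = call.get("arguments", {})
    if set(args) != {spec.arg}:
        raise ValueError(f"{spec.name} expects exactly one argument, {spec.arg!r}")
    return spec, args[spec.arg]
```

Validating before dispatch means an invalid call can be reported back to the model as an observation instead of crashing the loop.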

Training uses ToolOrchestra's reinforcement learning over full trajectories. The reward covers task success (with GPT-5 judging open-ended answers), cost (based on API pricing), latency, and user preferences such as avoiding certain tools. The policy is optimized with Group Relative Policy Optimization (GRPO) on synthetic data from 552 problems and 1,296 prompts, per the NVIDIA Technical Blog.
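A minimal sketch of how such a reward and GRPO's group-relative advantage might fit together. The linear reward form, the weights, and the rule that wrong answers earn nothing are illustrative assumptions, not ToolOrchestra's published formula. The advantage function follows the usual GRPO recipe: score several rollouts of the same prompt, center each reward on the group mean, and scale by the group standard deviation (population std here).

```python
import statistics
from dataclasses import dataclass

@dataclass
class Trajectory:
    solved: bool            # judged correct (GPT-5 acts as judge for open answers)
    cost_usd: float         # summed API cost of every call in the trajectory
    latency_s: float        # wall-clock time for the whole trajectory
    used_banned_tool: bool  # violated a user preference such as "avoid tool X"

def reward(t: Trajectory, w_cost: float = 1.0, w_latency: float = 0.001,
           w_pref: float = 0.5) -> float:
    """Hypothetical scalar reward; the linear form and weights are illustrative only."""
    if not t.solved:
        return 0.0  # assumption: no efficiency credit for a wrong answer
    r = 1.0 - w_cost * t.cost_usd - w_latency * t.latency_s
    if t.used_banned_tool:
        r -= w_pref  # penalty for ignoring the user's tool preference
    return r

def grpo_advantages(rewards: list[float], eps: float = 1e-6) -> list[float]:
    """GRPO-style advantages: center each reward on its group mean and scale by the group std."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]
```

Scoring four rollouts of one prompt this way (cheap and correct; correct but slow and expensive; wrong; cheap and correct but using a banned tool) gives the cheap, correct, preference-respecting trajectory the largest advantage, which is the pressure toward cheaper, faster tool choices the article describes.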

Results on Benchmarks

On the reported benchmarks, Orchestrator-8B scores higher than GPT-5:

- Humanity's Last Exam (text-only questions): 37.1% accuracy vs. 35.1% for GPT-5.

- FRAMES: 76.3% vs. 74.0%.

- τ² Bench: 80.2% vs. 77.7%.

Average cost drops to 9.2 cents per query vs. 30.2 cents for GPT-5, and average latency to 8.2 minutes vs. 19.8 minutes. It also generalizes to models it was not trained with and follows user preferences more closely. NVIDIA notes that it still comes out ahead when the turn limit is cut to 10 or 20.
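As a quick check on the cost and latency figures, the ratios work out to roughly 3.3x cheaper and 2.4x faster on average. The snippet reuses only the numbers quoted above.

```python
def compare(ours: float, baseline: float) -> tuple[float, float]:
    """For a lower-is-better metric, return (baseline / ours, fractional reduction)."""
    return baseline / ours, 1 - ours / baseline

REPORTED = {  # (Orchestrator-8B, GPT-5) averages quoted above
    "cost (cents/query)": (9.2, 30.2),
    "latency (minutes)": (8.2, 19.8),
}

for metric, (ours, gpt5) in REPORTED.items():
    ratio, reduction = compare(ours, gpt5)
    print(f"{metric}: GPT-5 is {ratio:.1f}x higher; Orchestrator-8B is {reduction:.0%} lower")
# cost (cents/query): GPT-5 is 3.3x higher; Orchestrator-8B is 70% lower
# latency (minutes): GPT-5 is 2.4x higher; Orchestrator-8B is 59% lower
```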

Availability and Next Steps

Orchestrator-8B is available as open weights on Hugging Face, and the training code has been released as well. To train your own orchestrator, pick a base model such as Qwen3-8B or Nemotron Nano, generate synthetic tasks with NVIDIA's pipeline sketch (subjects, schema, database, tools, tasks), and then run ToolOrchestra training.
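The five-stage synthetic-data pipeline can be illustrated with a toy, fully deterministic version. Every function here is hypothetical: NVIDIA's description has an LLM generate each stage, whereas this sketch fills the stages with simple templates and seeded random numbers so the data flow (subject → schema → database → tools → tasks with checkable answers) is easy to follow.

```python
import random
from dataclasses import dataclass

@dataclass
class Environment:
    subject: str
    schema: dict[str, list[str]]     # table name -> column names
    database: dict[str, list[dict]]  # table name -> rows
    tools: list[str]                 # tool names exposed to the agent
    tasks: list[dict]                # questions with verifiable answers

def make_schema(subject: str) -> dict[str, list[str]]:
    # Hypothetical template; a real pipeline would have an LLM propose the schema.
    return {f"{subject}_records": ["id", "value"]}

def fill_database(schema: dict[str, list[str]], rng: random.Random,
                  rows: int = 5) -> dict[str, list[dict]]:
    # Populate every non-id column with a random integer.
    return {
        table: [{"id": i, **{c: rng.randint(1, 100) for c in cols if c != "id"}}
                for i in range(rows)]
        for table, cols in schema.items()
    }

def make_tools(schema: dict[str, list[str]]) -> list[str]:
    # Hypothetical tool names: one lookup and one listing tool per table.
    return [name for table in schema for name in (f"get_{table}_by_id", f"list_{table}")]

def make_tasks(database: dict[str, list[dict]], rng: random.Random, n: int = 3) -> list[dict]:
    tasks = []
    for _ in range(n):
        table = rng.choice(sorted(database))
        row = rng.choice(database[table])
        tasks.append({
            "question": f"What is the value of record {row['id']} in {table}?",
            "answer": row["value"],  # ground truth read straight from the database
            "required_tool": f"get_{table}_by_id",
        })
    return tasks

def build_environment(subject: str, seed: int = 0) -> Environment:
    rng = random.Random(seed)  # seeded so the same subject and seed yield the same environment
    schema = make_schema(subject)
    database = fill_database(schema, rng)
    return Environment(subject, schema, database, make_tools(schema), make_tasks(database, rng))
```

Deriving each answer directly from the generated database is what makes the tasks automatically verifiable, which is the property an RL reward for task success depends on.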