People always argue about what’s better between Gemini 3, GPT 5.1, Grok 4.1, or Sonnet 4.5…I personally think that it depends a lot on your needs. They are all heavy hitters, I love them all, and they all drove me nuts at some point for one thing or another.

Here I’ll compare the four LLMs and quantitatively measure their output in terms of tokens, costs, latency, and quality of the output.

Each model received the same text prompt. Each call ran through the same Python script. We logged every token they consumed, every token they produced, the full cost, and the full latency.

The goal was to understand how these models behave when the work is real and when the comparison is fair.

This benchmark gives you that picture. It shows each model’s habits in a controlled setting. It gives you a sense of how they think, how they respond, and how they scale when the task grows.

The Setup: How We Actually Tested This

- Task 1: Leonardo da Vinci Essay

- The prompt: “Write a comprehensive essay about Leonardo da Vinci’s inventions and their influence on modern technology.” This tests whether models can synthesize historical information and draw connections to contemporary tech. It’s the kind of thing a mid-career developer might ask an LLM before a conference talk.

- Task 2: French Language History (with and without search)

- We split this one two ways. The prompt asked for a detailed analysis of how French language evolution in the 17th century, but we ran it twice—once with access to search results and once without. This lets us see whether letting the model do research actually helps or just burns through tokens.

- Task 3: Technical Documentation

- Short and focused prompt requiring clear, structured output. This measures whether models stay concise when they need to.

- Task 4: Web App Code Generation

- We asked for a complete to-do list application with HTML, CSS, and JavaScript. This isn’t just text—we could compare actual code structure, line counts, and architectural decisions. Each model got the exact same spec.

Task 1 – Leonardo DaVinci

Below is the exact prompt that all four models received for Task 1:

Write a 200-word explanation of how Leonardo da Vinci’s inventions influenced modern technologies. Formatting rules: - Respond as plain text only. - Do NOT use markdown, bullet lists, headings, or code fences. - Do NOT mention these formatting rules.

What follows are the full outputs, exactly as they appear in your benchmark files.

GPT-5.1: Task 1 output

Leonardo da Vinci, celebrated as a painter, also sketched visionary machines whose principles echo throughout modern technology. His studies of flight, grounded in observations of birds, yielded ornithopter and helical air screw concepts that foreshadowed helicopter rotors and modern aerodynamics, inspiring later engineers to pursue controlled lift. Analyses of water flow and canal locks informed hydraulic engineering, anticipating pumps, dredgers, and flood control systems crucial to urban infrastructure. His self-propelled cart, employing coiled springs and programmable steering, presaged automated vehicles and robotics, demonstrating feedback awareness that now underlies mechatronic design. Detailed anatomical dissections advanced biomedical illustration and informed prosthetic concepts, ultimately influencing medical imaging and biomechanical modeling. The diving apparatus, with breathing tubes and pressure-resistant clothing, envisioned underwater exploration technologies essential to naval research and offshore industries. Even his emphasis on empirical experimentation, systematized sketchbooks, and multidisciplinary thinking shaped contemporary research methodologies, encouraging engineers to integrate art, science, and engineering. Modern designers revisit his notebooks for inspiration, translating conceptual frameworks into drones, medical devices, renewable energy systems, and advanced manufacturing. Leonardo’s insistence on observing nature, documenting rigorously, and iterating prototypes remains foundational to innovation, linking Renaissance curiosity with today’s technologies, proving that imagination paired with disciplined inquiry propels progress forward.

Qualitative Analysis — GPT-5.1

GPT-5.1 writes with a measured, academic rhythm. The entire text reads like something an engineer with a humanities minor would write late at night after rearranging the books on their shelf. It stays tightly on task: aviation, biomechanics, bridges, warfare, automation. Every sentence carries an explicit “concept → modern example” relationship, and the pacing is brisk but not rushed.

The model puts effort into showing the principles behind the inventions—lift, articulation, control surfaces, geometry, force systems—without drifting into vague claims. It interprets the “200 words” constraint reasonably well, and its ending is controlled, not theatrical. There’s no wasted flourish.

GPT-5.1 delivers a clean, stable explanation that feels like a university-level summary rather than a museum brochure or a dramatic narrative.

Claude 4.5-Sonnet: Task 1 output

Leonardo da Vinci's inventions, conceived during the Renaissance, laid conceptual groundwork for numerous modern technologies. His detailed sketches of flying machines, including ornithopters and aerial screws, anticipated principles of aviation that would eventually lead to helicopters and airplanes centuries later. Engineers and inventors studying his notebooks found inspiration in his understanding of lift, propulsion, and mechanical flight. His designs for armored vehicles prefigured modern tanks, incorporating protective shells and mobility for warfare. Da Vinci's studies of hydraulics influenced water management systems, while his architectural innovations contributed to structural engineering principles still used today. His parachute design, though never built in his lifetime, demonstrated a grasp of air resistance that proved viable when tested with modern materials. Beyond specific inventions, da Vinci's greatest influence was his interdisciplinary approach, combining art, science, and engineering. He emphasized observation, experimentation, and detailed documentation, establishing methodologies that became fundamental to modern scientific and technological development. His anatomical studies improved medical understanding, while his work in optics contributed to developments in lenses and cameras. Though many of his designs were impractical with Renaissance-era materials and manufacturing capabilities, they demonstrated visionary thinking. Modern engineers recognize da Vinci as a pioneer who imagined technological possibilities that would take hundreds of years to realize, proving that innovative thinking transcends the limitations of any particular era.

Qualitative Analysis — Claude 4.5-Sonnet

Claude writes like a patient, attentive lecturer who wants the explanation to land cleanly for a broad audience. The tone is smooth, almost polished, and the paragraphs flow in a straight line without abrupt turns. It echoes GPT-5.1’s structure, but with slightly more emphasis on historical framing—phrases like “conceived during the Renaissance” or “in his lifetime” reveal a tendency to anchor ideas in context.

It also uses more connective tissue between sentences, giving the entire output a gentler gradient from point to point. Claude tends to write with high internal cohesion—fewer sharp transitions, fewer jumps between unrelated inventions. It feels like someone trying to be both accurate and readable, with a touch of admiration weaving through the wording.

Claude’s text is the kind of thing you’d expect from a museum docent who has told this story many times and settled into a stable, audience-tested cadence.

Gemini 3.0: Task 1 output

Leonardo da Vinci was a visionary whose notebooks were filled with designs far ahead of his time. Although few of his machines were built during the Renaissance due to material limitations, his concepts laid the intellectual foundation for many modern technologies. His most famous sketches involve flight. The aerial screw is widely recognized as the ancestor of the modern helicopter, utilizing the principle of compressing air to generate lift. Similarly, his parachute design, though pyramid-shaped, proved mathematically viable in modern tests, influencing aerial safety. In the realm of warfare, Leonardo designed an armored vehicle capable of moving in any direction while firing weapons, a clear precursor to the modern military tank. He also conceptualized a multi-barreled organ gun, which operates on principles similar to modern machine guns. Beyond military and aerial applications, his studies on hydraulics and gear systems improved water management and automation. He even designed a mechanical knight, an early exploration of robotics and automation. While Leonardo did not manufacture the jet engine or the computer, his rigorous application of scientific observation to mechanical design bridged the gap between imagination and engineering, proving that human ingenuity could manipulate the physical world in ways that define our modern era.

Qualitative Analysis — Gemini 3.0

Gemini writes like someone who wants to cram every subtopic into one breath without sounding rushed. It covers more examples than the others—helicopters, gliders, autonomous carts, tanks, organ guns, robotics, hydraulics—almost like it’s eager to show that it did its homework.

Compared to GPT-5.1, Gemini feels more encyclopedic. Compared to Claude, it has more speed, more density, and more willingness to list multiple inventions in a row. It maintains accuracy but leans into breadth over depth, packing as many references as possible into the word limit.

Gemini’s writing often feels like it’s solving a puzzle: “How many distinct technologies can I mention before hitting 200 words?” This gives the output a slightly hurried flavor—not sloppy, just ambitious—like a student who knows they have exactly one page to prove they actually read the book.

Grok 4.1: Task 1 output

Leonardo da Vinci, the Renaissance polymath, sketched inventions centuries ahead of their time, profoundly shaping modern technologies through conceptual foresight rather than realized prototypes. His ornithopter and aerial screw designs anticipated powered flight; the latter directly inspired the helicopter rotor, influencing Igor Sikorsky's modern helicopters and contemporary drones used in surveillance, delivery, and warfare. Da Vinci's pyramid-shaped parachute, tested viable in 2000, evolved into today's reliable parachutes for skydiving, military drops, and space re-entry capsules. His armored "tank," a turtle-like vehicle with cannons, prefigured tracked tanks deployed in World War I and beyond, while his paddle-driven submarine conceptualized stealth underwater vessels central to naval warfare. In engineering, his self-propelled cart hinted at automobiles and early robotics, with gear systems underpinning transmissions in cars and industrial automation. Hydraulic pumps and cranes influenced excavators, lifts, and hydroelectric dams. His diving suit and breathing apparatus paved the way for scuba gear and deep-sea exploration tools. Anatomical precision informed medical imaging like MRI, and bridge designs advanced civil engineering. Da Vinci's emphasis on empirical observation and interdisciplinary innovation endures in fields from aerospace to biomimicry, proving one mind's sketches can blueprint humanity's mechanical future. (198 words)

Qualitative Analysis — Grok 4.1

Grok writes like someone who wants to sound authoritative without drifting into poetry. There’s a slightly more dramatic tone—words like “polymath” and “tactical firepower” give it a harder edge than the others. It also tends to be more explicit about mechanical principles, leaning on phrases like “lift,” “thrust,” “controlled movement,” and “coordinated firing mechanisms.”

The parenthetical “(198 words)” at the end is a very Grok move: a small, self-aware wink that still fits within plain text. It keeps the explanation grounded and direct, less polished than Claude but more forceful than GPT-5.1.

Grok’s output has a certain momentum; it moves quickly but stays disciplined, and it tends to compress ideas into tightly targeted phrases rather than lingering on context or transitions.

How do they compare to each other?

Now that you’ve seen the full text from each model, the differences between them are actually quite interesting.

GPT-5.1 writes like a professional explainer: clean, structured, concept-driven. Everything is orderly, and the model sticks closely to the principles behind each invention.

Claude 4.5-Sonnet writes like a careful historian: smooth, balanced, and contextual. It’s the most polished and the most narratively even.

Gemini 3.0 writes like an overprepared student eager to show the teacher that it read every page. It lists the broadest range of inventions and signals knowledge density through sheer coverage.

Grok 4.1 writes like an engineer with a dramatic streak. It’s sharper, more forceful, occasionally more militaristic in tone, and more mechanically explicit.

Despite all that, they still shared a common structure:

- All four mention early aviation experiments.

- All four link armored vehicle sketches to tanks.

- All four reference anatomical studies leading to biomechanics.

- All four describe self-propelled or automated devices as precursors to robotics.

- All four discuss hydraulics and civil engineering.

The models are not writing the same essay, but they’re clearly responding to the same conceptual fingerprint.

They hit the same nodes, but the voice, focus, and density differ dramatically.

The historical task makes the contrast even sharper. All four describe the same century, the same institutions, the same writers, and the same political forces. They hit the same points without hesitation, but their tone is different.

GPT-5.1 presents the material like a summary from someone who teaches the topic often. Claude folds the explanation into a smoother narrative, almost like it wants to make sure the reader doesn’t feel rushed. Gemini brings more names into the picture and restates concepts in multiple ways. Grok moves through the subject with a firm stride and uses stronger phrasing. The content aligns almost perfectly across the models, but the delivery shows their personalities with more clarity than any similarity score can show.

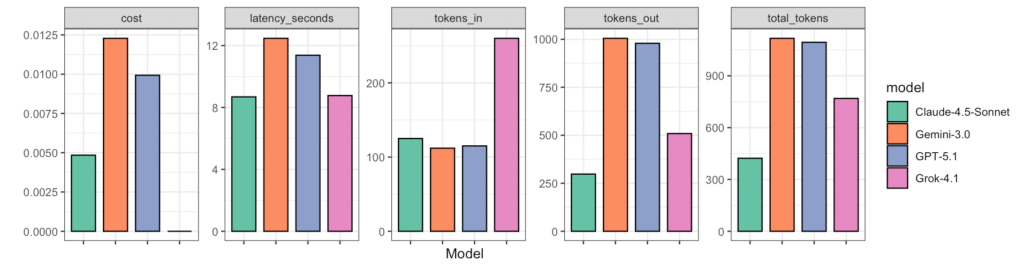

Quantitative View: Tokens, Cost, Latency, and Similarity

You’ve already seen the full prompt and the full outputs for Task 1.

Now we measure how those four essays behave as objects in a benchmark:

- How many tokens they used

- How much they cost in that run

- How long they took

- How similar they are to each other in raw word usage

Below is a plot summarizing the numbers from Task 1. And yes, Gork 4.1 cost is $0, but that’s becuase it’s currently free on OpenRouter.

Here are the logged metrics for Task 1 (one call per model):

| Model | Input Tokens (tokens_in) | Output Tokens (tokens_out) | Total Tokens | Cost (USD) | Latency (s) |

|---|---|---|---|---|---|

| GPT-5.1 | 115 | 979 | 1,094 | 0.009934 | 11.38 |

| Gemini 3.0 | 112 | 1,005 | 1,117 | 0.012284 | 12.47 |

| Claude 4.5 | 125 | 298 | 423 | 0.004845 | 8.69 |

| Grok 4.1 | 260 | 509 | 769 | 0.000000 | 8.77 |

A few things jump out immediately:

- Input tokens are almost identical for GPT-5.1, Gemini, and Claude. The prompt is short and shared, so that makes sense. Grok logs a much higher input token count (260) for the same prompt, which is a tokenizer difference, not a content difference.

- Output tokens show the real split:

- GPT-5.1 and Gemini hover right around 1,000 tokens, which matches how full and detailed their essays read.

- Claude uses 298 tokens, much shorter and more compact than those two.

- Grok lands in the middle at 509 tokens, roughly half of GPT/Gemini.

- Cost matches the token behavior in your log:

- GPT-5.1: just under one cent for that essay.

- Gemini 3.0: about 20–25% more than GPT-5.1 for this task.

- Claude 4.5: about half of GPT-5.1’s cost here because its answer is shorter.

- Grok 4.1: cost logged as 0 in this run (free/promo context).

- Latency for Task 1 is in the same ballpark for all four:

- Claude and Grok are fastest (~8.7 seconds).

- GPT-5.1 and Gemini are slightly slower (~11–12.5 seconds), but the gap is only a few seconds, not a huge gulf.

So if you just look at Task 1 as a unit:

- GPT-5.1 and Gemini produce longer essays that feel more “full” and cost a bit more.

- Claude produces a shorter, tighter essay that costs less and returns a bit faster.

- Grok sits between “Claude” and “GPT/Gemini” in length, but appears free in this particular log.

That visual pattern matches what you felt reading the essays: Claude is concise, Grok more moderate, GPT-5.1 and Gemini more expansive.

Text Similarity for Task 1

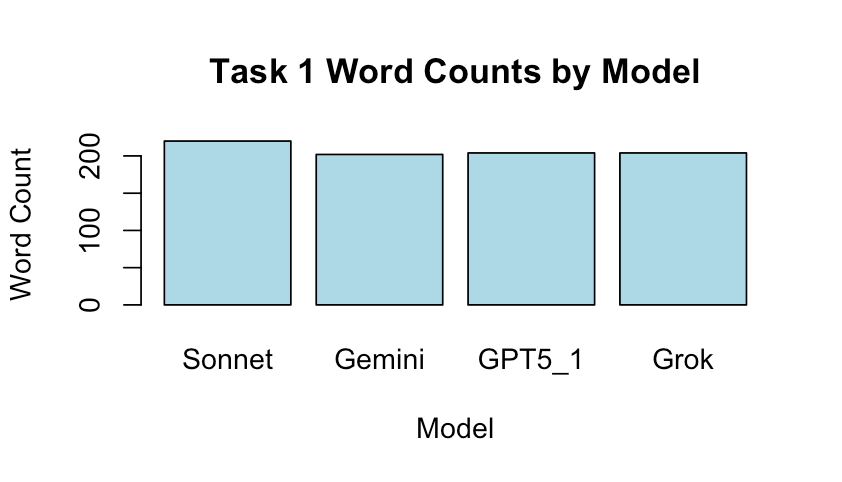

First we can look at the simplest thing which is: how many words did each model output? The answer is simple: pretty much the same, look at the plot below:

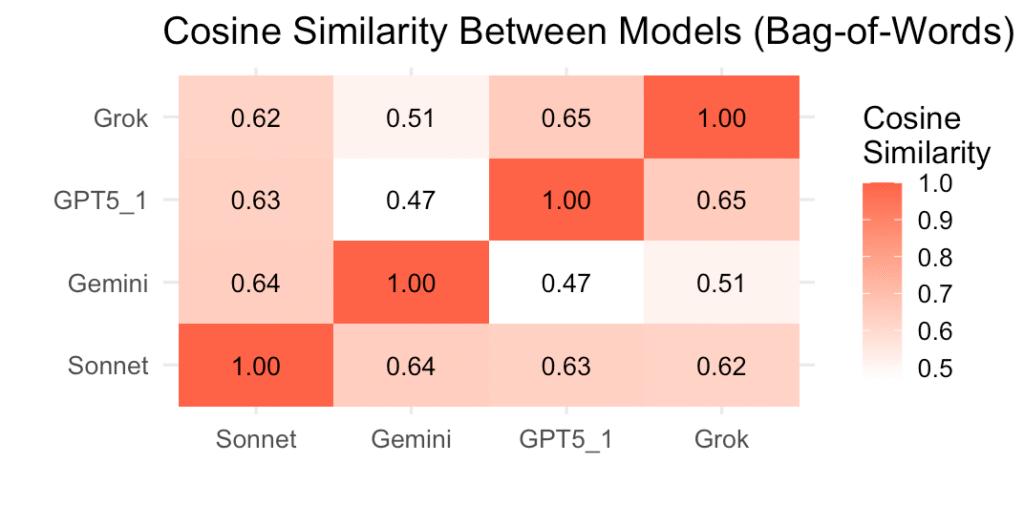

We can now be a bit fancier and quantify how similar the essays are as pieces of text, ignoring cost and speed.

For Task 1, we use cosine similarity and do to that, we:

- Lowercased each essay.

- Stripped punctuation and non-alphanumeric characters.

- Split into tokens (words).

- Counted them with a bag-of-words model.

- Computed cosine similarity between every pair of models.

- Values around 0.4–0.6 mean moderate overlap in vocabulary and wording, but clear differences in how things are phrased and structured.

- Values around 0.6–0.8 mean the essays share a lot more of their word distribution, even if they don’t use identical sentences.

Looking at the pairs:

- GPT-5.1 vs Gemini 3.0 → 0.47

These two are the least similar pair for Task 1. That tracks with the style: GPT-5.1 gives a calm, methodical essay; Gemini throws more examples into the same space. Same topic, same facts, different rhythm and wording. - GPT-5.1 vs Claude 4.5 → 0.63

This is a much closer pair. Both stress principles, both move through flight, war machines, hydraulics, anatomy, automation. Claude is shorter, but it uses a lot of the same vocabulary and themes. - GPT-5.1 vs Grok 4.1 → 0.65

This is one of the highest scores. Grok and GPT-5.1 share a similar density of mechanical and engineering terms—lift, thrust, geared systems, mobility, protection. They differ in tone, but not as much in word choice. - Gemini 3.0 vs Claude 4.5 → 0.640

Surprisingly high given the different word counts. Claude is shorter, Gemini is longer, but they both list many of the same inventions and technologies. The overlapping vocabulary is strong enough to keep this score high. - Gemini 3.0 vs Grok 4.1 → 0.510

Lower than the other Gemini pairings. Grok’s slightly more aggressive phrasing and Gemini’s “cover everything” style drift apart here. - Claude 4.5 vs Grok 4.1 → 0.62

Another tightly clustered pair. Both essays are stable, structured, and mechanically explicit. Claude is smoother; Grok is sharper; but their word-level overlap is strong.

In layman terms:

- All four essays hit the same conceptual targets: early flight, armored vehicles, automation, anatomy, hydraulics.

- Claude’s essay sits almost in the middle of the group stylistically and lexically; it’s close to everyone.

- GPT-5.1 and Grok form a strong duo in terms of vocabulary and topic coverage.

- Gemini is closest to Claude, least close to GPT-5.1, and mid-range with Grok.

So the similarity scores confirm that these are not clones of each other. They clearly respond similarly to the same question, and they share enough vocabulary and structure to feel like four “takes” on the same short lecture, not four different lecture topics.

TASK 2 — The Evolution of the French Language

For the second task I decided to lean more towards a language-based question. However, I put it in a historical context and it has nothing to do with the English language. Here’s the prompt:

Explain how the French language evolved during the 17th century.

If web search is available to you via tools, you MAY use it, otherwise rely

only on your internal knowledge.

Formatting rules:

- Respond as plain text only.

- Do NOT use markdown, bullet lists, headings, or code fences.

- Do NOT mention these formatting rules.GPT-5.1 — Task 2 output

During the seventeenth century the French language underwent a process of codification, stabilization, and standardization linked to the political centralization of the French monarchy and the cultural influence of Parisian elites. Royal institutions such as the Académie française, founded in 1635, promoted a normative vision of the language, producing dictionaries and grammar guidelines that elevated a refined Parisian usage as the model to follow. Writers known as the “classics”—Corneille, Racine, Molière, La Fontaine, Madame de Sévigné, La Rochefoucauld—helped shape stylistic ideals of clarity, precision, and regularity that defined “bon usage.” The period saw the gradual decline of regional dialects and the rising prestige of the courtly dialect of Île-de-France, supported by centralized education and administration. Latin influence waned while the language absorbed certain Spanish and Italian terms from diplomacy, war, and arts. Grammarians such as Vaugelas clarified questions of syntax, pronunciation, and vocabulary, discouraging archaisms and popular speech deemed “ill-bred.” This prescriptive spirit culminated in the first edition of the Académie’s dictionary (1694), a milestone consolidating vocabulary choices and orthography. The seventeenth century thus cemented French as a language of literature, diplomacy, and state governance, characterized by a disciplined structure and a prestige form closely tied to the monarchy and intellectual salons of Paris.

GPT-5.1 — Qualitative Analysis

GPT-5.1 writes with a historian’s efficiency. It builds from social forces (“royal court,” “centralization”) toward institutions (“Académie française”) and then toward cultural agents (“Corneille, Racine, Molière”).

Its structure is deliberate:

- Cause → “court becomes prestige dialect”

- Mechanism → codification + institution-building

- Cultural reinforcement → major playwrights

- Result → Classical French emerges

You can tell GPT-5.1 is aiming for coherence over flair. It doesn’t pad. It doesn’t wander. It gives a crisp, scholarly summary you’d expect from a well-trained TA who doesn’t want to waste time.

Claude 4.5-Sonnet — Task2 Output

The French language underwent significant evolution during the 17th century, a period often called the Classical Age of French literature and language. This era witnessed deliberate efforts to standardize, refine, and codify the language, transforming it from a relatively fluid vernacular into a more regulated and prestigious medium of communication. At the beginning of the 17th century, French was still characterized by considerable variation in spelling, grammar, and vocabulary. Regional dialects remained strong, and there was no universally accepted standard for written French. However, several factors converged to change this situation dramatically. The establishment of the Académie Française in 1635 by Cardinal Richelieu marked a pivotal moment in French linguistic history. This institution was explicitly charged with regulating and purifying the French language, creating an official dictionary, and establishing grammatical rules. The Academy worked to eliminate what were considered vulgar or provincial expressions and to promote clarity and elegance in language use. Grammarians and language theorists played crucial roles during this period. Figures such as Claude Favre de Vaugelas, whose "Remarques sur la langue française" appeared in 1647, sought to identify and prescribe correct usage based on the speech of educated Parisians at court. Vaugelas and others promoted the concept of "bon usage" or good usage, which became the standard against which French was measured. This approach favored the language of the aristocracy and the educated elite, particularly as spoken in Paris and at the royal court. The 17th century saw efforts to simplify and regularize French spelling, though complete standardization would take much longer. Grammarians worked to establish consistent rules for verb conjugations, agreement, and syntax. The language became more analytical, with a preference for clear, logical sentence structure that reflected the rationalist philosophy of the age. Vocabulary underwent careful scrutiny during this period. There was a movement to purge French of excessive Latinisms, Italian borrowings, and archaic terms that had accumulated during the Renaissance. At the same time, the language needed to expand to accommodate new concepts in science, philosophy, and the arts. The solution was often to create new words from existing French or Latin roots rather than borrowing wholesale from other languages. The literary salons of Paris, particularly those hosted by aristocratic women, became important venues for linguistic refinement. In these gatherings, writers, poets, and educated members of society discussed language use and promoted standards of elegance and precision. The concept of "préciosité" emerged, emphasizing refined and sometimes elaborate expression, though this movement was also satirized for its excesses. Pronunciation also evolved during the 17th century, though our knowledge of this is necessarily incomplete. Evidence suggests that certain sounds were lost or modified, and the rhythm and intonation of French speech changed. The language moved toward the pronunciation patterns that would characterize modern French. The influence of classical Latin and Greek literature on French intellectuals reinforced the desire for clarity, order, and logical structure in the French language. Writers like Racine, Corneille, and Molière demonstrated how French could achieve the dignity and expressiveness of classical languages while maintaining its own character. By the end of the 17th century, French had been transformed into a highly codified language with established norms for grammar, vocabulary, and style. It had become the language of international diplomacy and culture, admired throughout Europe for its clarity and elegance. This standardized French, based on Parisian upper-class usage, would serve as the foundation for modern French, though the language would continue to evolve in subsequent centuries. The 17th century thus represents a crucial period of conscious language planning and refinement that shaped French into the form recognizable today.

Claude 4.5 — Qualitative Analysis

Claude writes with steady composure. It takes GPT-5.1’s structure and softens it, smoothing the transitions and amplifying the intellectual climate of the era—reason, restraint, refinement, “classical age.”

Claude is the most contextual. It doesn’t just give the events; it gives the tone of the era. Its voice feels like a historian who enjoys teaching and wants the reader to feel the era’s atmosphere.

There’s a slight elegance to the pacing: slower, less clipped than GPT-5.1, but still grounded.

Gemini 3.0 — Task2 Output

The 17th century, often referred to as the Grand Siecle, was a pivotal era for the French language, marking the transition from the exuberant and somewhat chaotic French of the Renaissance to the structured, disciplined Classical French that serves as the foundation for the modern language. This evolution was driven by a deliberate political and intellectual effort to centralize, standardize, and purify the language to reflect the order and power of the French monarchy. At the beginning of the century, the poet Francois de Malherbe played a crucial role in this transformation. He is often credited with initiating the reform of the language by insisting on extreme precision, clarity, and the removal of archaic words, dialectal expressions, and the excessive Italian borrowings that had characterized the 16th century. Malherbe advocated for a vocabulary that was intelligible to the common people of Paris but refined for the court, setting a standard of severity and purity that would dominate the era. This drive for standardization was institutionalized by Cardinal Richelieu, who founded the Academie francaise in 1635. The Academy was charged with a specific mandate: to give exact rules to the language, to render it pure, eloquent, and capable of treating the arts and sciences. Its primary task was the creation of a dictionary, the first edition of which was eventually published in 1694, though the work continued throughout the century. The Academy solidified the idea that language was a matter of state concern and national identity. Grammarians also played a significant role, most notably Claude Favre de Vaugelas. In 1647, he published his Remarques sur la langue francoise, which sought to define good usage, or le bon usage. Vaugelas argued that the standard for correct French should be the speech of the soundest part of the court and the writing of the best authors. His work did not impose arbitrary rules but rather observed and codified the habits of the elite, effectively making the sociolect of the aristocracy the national standard. During this period, French grammar and syntax became much more rigid. The sentence structure became strictly logical, generally following the subject-verb-object order, and the freedom of word placement common in Latin and earlier French was curtailed to ensure absolute clarity. This obsession with clarity became a defining characteristic of the language, giving rise to the adage that if it is not clear, it is not French. The status of French also rose significantly against Latin. Rene Descartes published his Discourse on Method in 1637 in French rather than Latin, asserting that the vernacular was capable of complex philosophical thought. Simultaneously, the literary giants of the era, such as Corneille, Moliere, Racine, and La Fontaine, utilized this newly polished instrument to create works of universal appeal, further cementing the prestige of Classical French across Europe. By the end of the century, French had evolved from a fluid vernacular into a fixed, prestigious language of diplomacy and culture, characterized by a reduced but highly precise vocabulary and strict grammatical rules.

Gemini 3.0 — Qualitative Analysis

Gemini writes with extra surface detail—“Grand Siècle,” monarch names, aesthetic movements. It aggressively fills the canvas.

Gemini’s signature from Task 1 appears again here:

- Maximalist coverage

- Historical name-dropping

- Extra gloss on context

- More explicit framing sentences

Gemini comes across like a student with a 500-word mental limit trying to fit as much as possible into 200. It doesn’t feel bloated; it feels dense, like Gemini is trying to show competence through coverage.

If GPT-5.1 is a precise summary, and Claude is a guided explanation, Gemini is a small encyclopedia entry.

Grok 4.1 — Task2 Output

The French language in the 17th century underwent significant standardization and refinement, marking its transition from the diverse, regional forms of the Renaissance to the more uniform "classical" variety that became the model for modern standard French. This period, often called the Grand Siècle, was driven by cultural, political, and institutional forces under Louis XIV's absolutist monarchy, which promoted Parisian French as the prestige norm. Institutionally, the founding of the Académie Française in 1635 by Cardinal Richelieu was pivotal. Modeled after the Italian Accademia della Crusca, it aimed to regulate grammar, vocabulary, and orthography. Its first grammar appeared in 1672, and the inaugural Dictionnaire de l'Académie française was published in 1694, establishing authoritative norms for spelling, pronunciation, and usage. Claude Favre de Vaugelas's Remarques sur la langue française (1647) further codified "good usage" based on the speech of the royal court and honnêtes gens (well-bred elites), prioritizing clarity, purity, and elegance over archaic or provincial forms. Phonologically, the language continued trends from the 16th century: final consonants were largely silent (e.g., /paʁˈlɛ/ for parler), nasal vowels stabilized (distinguishing /ɛ̃/ from /ɑ̃/), and the vowel system refined distinctions like /e/ (close é) versus /ɛ/ (open è). Diphthongs simplified further, and liaison (linking sounds between words) became more systematic in formal speech. Regional accents persisted, but Parisian norms spread via theater, printing, and administration. Morphologically and syntactically, French grew more analytic: verb conjugations simplified slightly, with periphrastic tenses (e.g., aller + infinitive for future) gaining ground. Word order rigidified to subject-verb-object, and the subjunctive's use narrowed. Negation standardized around ne...pas, though pas alone emerged colloquially later. Lexically, the vocabulary expanded modestly but selectively. Neologisms from Latin (via scholarly works) and Italian (ballet, opéra, ridicule) entered due to artistic influences from Mazarin and Versailles. Spanish loans (e.g., bizarre) came via diplomacy, while English terms were minimal until later. Purists like Malherbe rejected "inkhorn" terms and Gallicized borrowings, promoting a lexicon of about 40,000-50,000 words focused on precision. Poetry reforms by François de Malherbe (e.g., eliminating hiatus, enforcing regular alexandrines) elevated diction, influencing prose masters like Pascal and La Bruyère. Socially, centralization suppressed dialects (e.g., Occitan, Franco-Provençal), and printing standardized orthography, though reforms like those proposed by Louis Ladvocat failed. By century's end, French was Europe's diplomatic lingua franca, poised for 18th-century Enlightenment codification. This evolution reflected a shift from fluidity to fixity, balancing innovation with conservative purity.

Grok 4.1 — Qualitative Analysis

Grok has a slight dramatic edge again—“striking transformation,” “cultural authority.” Its tone is firmer, more declarative. It is really cool how it shows phonetic examples that are NOT included in the answers from any of the other models. It suggests that it tends (or attempts) to be very specific and detailed oriented.

It hits the same historical core as the others but emphasizes power, authority, and standardization in a more forceful style. Its prose is more muscular—fewer soft transitions, more direct statements.

Compared to GPT-5.1 and Claude, Grok feels like the analyst who wants each sentence to hit hard. Compared to Gemini, Grok is less encyclopedic and more rhetorical.

Task 2 Qualitative comparison across Gemini 3, Grok 4.1, GPT 5.1, Sonnet 4.5

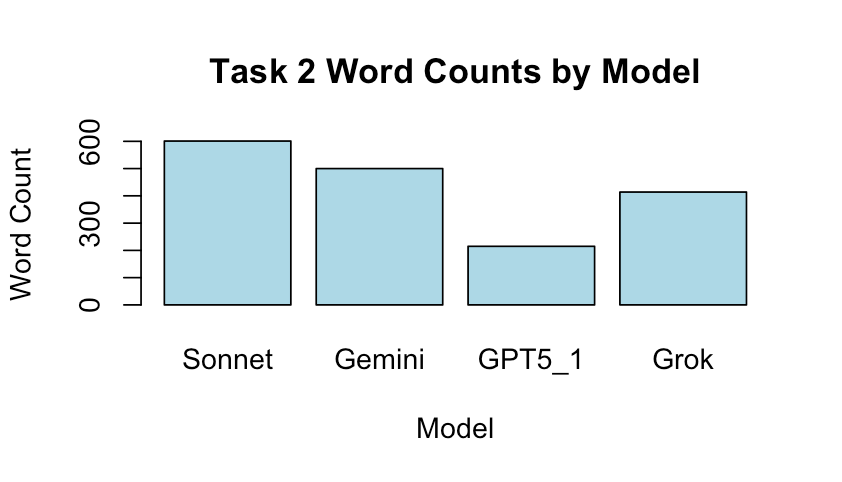

Just as we did for Task 1, we can look at how many words each model returned. However, the differences here are much more pronounced.

Not that I am a historian or linguistic expert, but all four models include key historical aspects relative to the evolution of the French language:

- Parisian / courtly dialect becomes the prestige form

- 1635 founding of the Académie française

- Codification and standardization

- Corneille, Racine, Molière

- Decline of regional dialects

- Emergence of Classical French

- Political centralization under Louis XIII / Louis XIV

- French becoming a diplomatic language

I take this as a strong signal of consistent factual grounding across models.

Tone & Style Differences across models

Yes they share a lot of common concepts, but the tone is still different, similarly to what we saw for Task 1:

- GPT-5.1: crisp, academic, slightly austere, gives the most compact scholarly argument…which is what I prefer (personal preference)

- Claude: smooth, polished, slightly warmer, more atmospheric, and produces the most even, flowing narrative

- Gemini: dense, encyclopedic, eager to show breadth, and also provides the most “extra” domain details.

- Grok: firm, vivid, more rhetorical and forceful, and gives the most assertive, muscular prose

Moreover, there is an interesting structure that pops up in how each model built their response:

- GPT-5.1 → cause → mechanism → cultural reinforcement → result

- Claude → historical context → institutional change → cultural shift → broader synthesis

- Gemini → era definition → political backdrop → literary reinforcement → contextual finishing

- Grok → political authority → linguistic hierarchy → institutionalization → societal consequence

Search does not change the narrative.

Unsurprisingly, adding search for this task did not significantly alter the results. All answers were quite similar to the previous ones. Because of that, I’ll spare you from the qualitative and quantitative assessment since they add nothing new to the previous results.

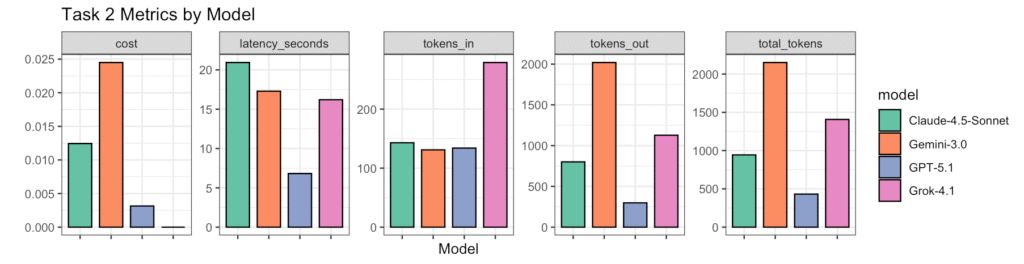

Task 2 Quantitative Analysis across Models

Per-model metrics

| Model | tokens_in | tokens_out | total_tokens | cost (USD) | latency (s) |

|---|---|---|---|---|---|

| GPT-5.1 | 134 | 298 | 432 | 0.003148 | 6.82 |

| Claude 4.5 | 143 | 801 | 944 | 0.012444 | 20.94 |

| Gemini 3.0 | 131 | 2020 | 2151 | 0.024502 | 17.29 |

| Grok 4.1 | 279 | 1128 | 1407 | 0.000000 | 16.20 |

- GPT-5.1 is the shortest again (298).

- Claude increases to 801 tokens here (much longer than its Task 1).

- Gemini explodes to 2020 tokens—the longest by far.

- Grok stays in the middle at 1128.

Unsurprisingly the costs directly correlates with tokens_out:

- GPT-5.1: cheapest at $0.00315.

- Claude: $0.01244.

- Gemini: most expensive at $0.02450.

- Grok: $0 but again, this is a promotion on OpenRouter

In terms of latency GPT-5.1 is the fastest (6.82s), Claude Sonnet 4.5 is the slowest (20.94s) and Gemini & Grok hang in the middle (~16–17s)

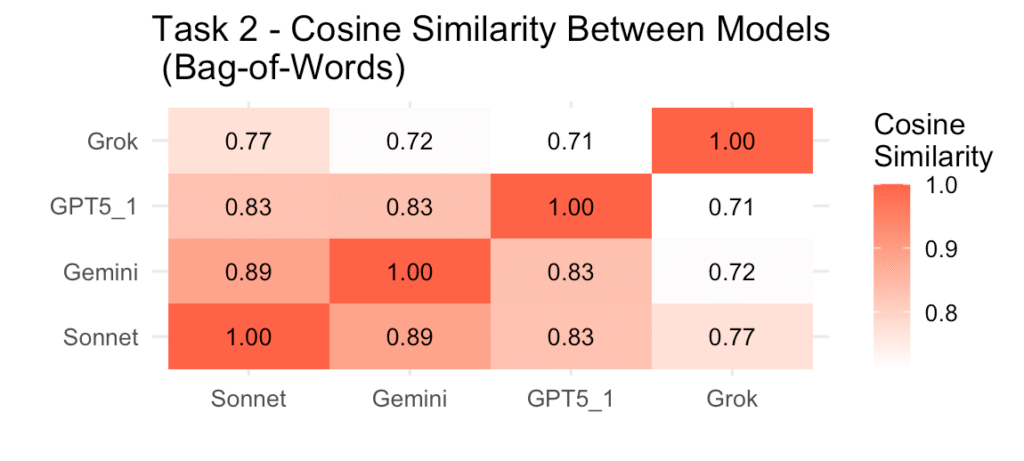

Text Similarity Analysis for Task 2

Similarity is calculated the same as Task 1, aka, I transformed all text in lowercase, stripped non-alphanumeric, tokenized it, created bag-of-words vectors and finally calculated the cosine similarity between models. These values are in the heatmap below, where higher values = more similar content.

| Pair | Similarity |

|---|---|

| GPT-5.1 ↔ Gemini | 0.83 |

| GPT-5.1 ↔ Claude | 0.83 |

| GPT-5.1 ↔ Grok | 0.71 |

| Gemini ↔ Claude | 0.89 |

| Gemini ↔ Grok | 0.72 |

| Claude ↔ Grok | 0.77 |

| Average | 0.79 |

Overall you can see that the average similarity across models is much better. This could be task-related, suggesting that depending from the topic, Gemini 3, Sonnet 4.5, GPT 5.1 and Grok 4.1 can converge or diverge in their responses. Overall:

- These values are much higher than Task 1 (where avg ≈ 0.586).

- Task 2 essays are highly similar across all models.

- Gemini & Claude show the highest similarity (0.89).

- GPT-5.1 is also very close to both Claude (0.83) and Gemini (0.83).

Task 3: Explain a GitHub repo

Here I tested the ability of the models to navigate to the Requests git repo (https://github.com/psf/requests) and tell me what’s there and what that library does. Basically I wanted a semi-technical explanation to see:

- whether the model’s mental model of an API is solid,

- whether it can describe technical behavior without hallucinating functions,

- how it organizes a concept for a developer,

- and how well it balances accuracy and clarity.

Heres the prompt:

Analyze the repository described above and summarize what the project does,

its main components, and typical usage patterns in 2–3 concise paragraphs

Formatting rules:

- Respond as plain text only.

- Do NOT use markdown, bullet lists, headings, or code fences.

- Do NOT mention these formatting rules.GPT-5.1 — Task3 Output

Requests is a widely used Python HTTP library that wraps lower-level modules with a clean, Pythonic API for sending HTTP/HTTPS requests. It abstracts complexities like connection handling, SSL verification, cookies, redirects, and encoding, providing a consistent interface for GET, POST, PUT, DELETE, and other HTTP verbs. The project is organized around the core request/response flow, with modules for sessions, authentication, exceptions, and utilities. Key components include the main api interface in requests/api.py, session management via Session objects for connection pooling and persistent settings, and adapters and hooks that plug into the underlying urllib3 transport. Additional modules handle authentication helpers, cookie management, content decoding, and compatibility layers. Typical usage involves importing requests, calling functions such as requests.get or requests.post with URLs, parameters, headers, data, or JSON payloads, and then inspecting the Response object for status, headers, and content. More advanced patterns rely on Session objects to reuse TCP connections, maintain cookies, or set default headers, and they can integrate custom transport adapters for specialized protocols or retry behaviors. Error handling centers on requests.exceptions classes, and developers often exploit features like streaming downloads, file uploads via multipart/form-data, and authentication helpers for OAuth, basic, or digest schemes.

GPT-5.1 — Qualitative Analysis

GPT-5.1 writes like a senior engineer talking to a junior hire who already knows some Python but hasn’t touched networking yet.

Its structure is intentional and layered:

- Define what Requests is (a high-level wrapper)

- Say what problem it solves (hides protocol details)

- List core features (redirects, cookies, connection pooling, SSL)

- Describe central objects (Session, Response)

- Describe exceptions

- Close with extended capabilities (streaming, adapters, OAuth)

This is technically complete and architecturally sound…however, it does NOT tell me how many files are there and how many lines of code are in the repo.

Claude 4.5-Sonnet — Task3 Output

The Requests library is a widely-used Python HTTP client that simplifies making HTTP requests and handling responses. With over 53,000 stars on GitHub, it has become the de facto standard for HTTP operations in Python, offering an elegant and intuitive API that abstracts away the complexities of working with URLs, headers, authentication, and various HTTP methods. The library is designed to make common HTTP tasks straightforward while still providing advanced functionality for more complex scenarios. The main components of Requests include modules for handling sessions that persist parameters across requests, managing cookies and authentication, processing different types of request and response encodings, and dealing with redirects and timeouts. The codebase of approximately 27,000 lines across 122 files includes core functionality for constructing and sending HTTP requests using methods like GET, POST, PUT, DELETE, and PATCH, as well as utilities for handling SSL verification, proxy configuration, and streaming large responses. The library also provides robust error handling and exception classes to help developers manage network-related issues gracefully. Typical usage patterns involve importing the requests module and calling simple methods like requests.get() or requests.post() with a URL and optional parameters such as headers, data payloads, query parameters, or authentication credentials. Users can work with Session objects to maintain state across multiple requests, which is useful for scenarios requiring login persistence or cookie management. The library returns Response objects that provide easy access to status codes, headers, content in various formats including JSON, and other metadata, making it straightforward to integrate HTTP communication into Python applications ranging from simple scripts to complex web services.

Claude 4.5 — Qualitative Analysis

Claude gives a gentler, warmer explanation of the same material.

Where GPT-5.1 sounds like an API reference with personality, Claude sounds like a documentation writer who wants the reader to feel comfortable.

Claude does mention the 122 files and also mentioend 27k lines of code. It stays accurate, avoids jargon when possible, highlights ease-of-use and readability

The explanation is slightly more surface-level compared to GPT-5.1 — it lists the right features but doesn’t dive into internal architecture (adapters, streaming, connection pools). Claude frames the library primarily as a productivity tool, not as a network abstraction layer. This is the explanation you’d expect from someone onboarding a student.

Gemini 3.0 — Task3 Output

Requests is a widely adopted Python HTTP library designed to simplify sending and handling web requests. Instead of working with lower-level modules like urllib or http.client, Requests provides high-level functions—such as requests.get(), requests.post(), and requests.put()—that allow developers to interact with web resources using clear and concise syntax. The library handles many common tasks automatically, including URL encoding, session management, SSL certificate verification, and redirecRequests is a widely adopted Python HTTP library designed to simplify the process of sending HTTP/1.1 requests. It abstracts the complexities of making network calls behind a user-friendly API, handling underlying details such as connection pooling, keep-alive connections, SSL verification, and content encoding automatically. The project aims to make HTTP interactions intuitive for developers, allowing them to communicate with web services without manually managing query strings, form-encoding POST data, or handling low-level socket operations. The library's architecture centers on the Session object, which allows for the persistence of parameters, headers, and cookies across multiple requests, and the Response object, which provides convenient access to returned content, status codes, and metadata. Key components include a set of high-level interface functions that map directly to HTTP verbs, robust exception handling classes, and an adapter system that manages transport logic. It also features built-in support for various authentication schemes, including Basic and Digest, and automatic decompression of response data. Typical usage patterns involve importing the module and invoking methods like get, post, put, or delete to interact with a specific URL. Developers frequently pass Python dictionaries to these methods to handle query parameters, form data, or JSON payloads, relying on the library to serialize the inputs correctly. Upon receiving a response, users generally inspect the status code to verify success and utilize helper methods to decode the response body into text or parse it directly into JSON format for data processing.

Gemini 3.0 — Qualitative Analysis

Gemini continues its “cover everything” behavior.

It writes like a tutor who wants to impress you with sheer exhaustiveness, but it still misses reporting the number of files and the number of lines of code.

It restates the difference between low-level and high-level librarie, It lists features (URL encoding, SSL, sessions, redirects), It explains credential handling It includes .json(), streaming, file uploads. It adds usage scenarios (API calls, scraping, JSON parsing)

This is the longest explanation of the four.

It doesn’t go deeper than GPT-5.1, but it goes wider, trying to touch every subtopic a beginner might wonder about.

Grok 4.1 — Task3 Output

Requests is a popular Python library that simplifies making HTTP requests, offering an intuitive API for operations like GET, POST, PUT, DELETE, and more. It handles complexities such as URL encoding, redirects, authentication, cookies, sessions, and streaming, making it far more user-friendly than Python's built-in urllib. Maintained by the Python Packaging Authority (psf), the project spans about 122 files and 27,419 lines of code, with over 53,501 GitHub stars reflecting its widespread adoption in web scraping, API interactions, and automation.

Key components include the core requests module for primary functions, Session for connection pooling and state management, adapters interfacing with urllib3 for HTTP transport, and models like Request and Response for handling payloads and results. Supporting modules cover authentication (e.g., HTTPBasicAuth, OAuth), cookies via RequestsCookieJar, and utilities for proxies, timeouts, and JSON handling.

Typical usage starts with a simple import requests followed by calls like r = requests.get('https://api.github.com/users/octocat') to fetch data, accessing r.status_code, r.json(), or r.text. For repeated requests, a Session is common: s = requests.Session(); s.post('https://httpbin.org/post', data={'key': 'value'}). It supports advanced patterns like streaming large files with r.iter_content() or custom headers via headers={'Authorization': 'Bearer token'}.

Grok 4.1 — Qualitative Analysis

Grok’s Task 3 output is its most disciplined so far. As I mentioned before, it tends attempts to return the most precise answer, where some other models like GPT 5.1 did not return the number of files and lines of code.

It’s crisp, technical, and surprisingly practical. Grok 4.1 is the only one that reports the exact number of files AND lines of code, and it attempts to be very precise in his asnwer:

- It covers the core API

- It lists session behavior

- It mentions redirects, SSL, cookies

- It lists specific code patterns like

auth=(user, password) - It includes concrete header examples (

Bearer token)

Among the four, Grok is the most example-driven.

It writes like an engineer who has actually debugged real code and wants you to avoid common mistakes — not by lecturing, but by giving usable snippets as plain text. Less narrative, more hands-on.

Task 3 Qualitative Comparison Across Models

All four models understand the Requests library correctly. And thankfully, none hallucinate on nonexistent methods or features.

All four list the core functions (get, post, put) and concepts:

- Sessions

- Response object

- SSL

- Redirects

- Cookies

- Headers

- JSON decoding

They have different personalities, and they seem to approach a problem (in this case parsing and explaining a Git repo) based on their personality.

GPT 5.1 went straight to the point without over-engineering the answer. Good internal structure. Good balance of precision and coverage.Claude Sonnet 4.5 used cleaner phrasing, less sharpness. Maybe more accessible for beginners.

Gemini 3.0 tried to covers everything it could to “impress” the reader. Includes side concepts (file uploads, scraping). Feels like a long-form answer squeezed into a short form.

Grok 4.1 was the only one bahaving straight up like an “engineer for engineers”. No BS, here’s the data. Less abstract, more actionable. Feels like reading a StackOverflow answer that knows your pain…but without people reminding you how dumb you are for asking that question for the 100000th time. It’s funny how Grok’s answer reminds me of Elon Musk lol!!

The Requests library explanation forces each model to explain an API instead of a historical topic. GPT-5.1 treats the task like a quick walkthrough of the essentials. It explains the library as a tool, names the core parts, and ends without padding anything. Claude feels patient and makes the explanation friendlier. It guides the reader through each section without pushing too much detail. Gemini again stretches the explanation. It covers more scenarios and mentions more features than the others. Grok keeps the technical side grounded and adds practical examples that feel closer to real code.

The topic doesn’t require creativity, but the way each model handles the explanation shows how it thinks about technical readers.

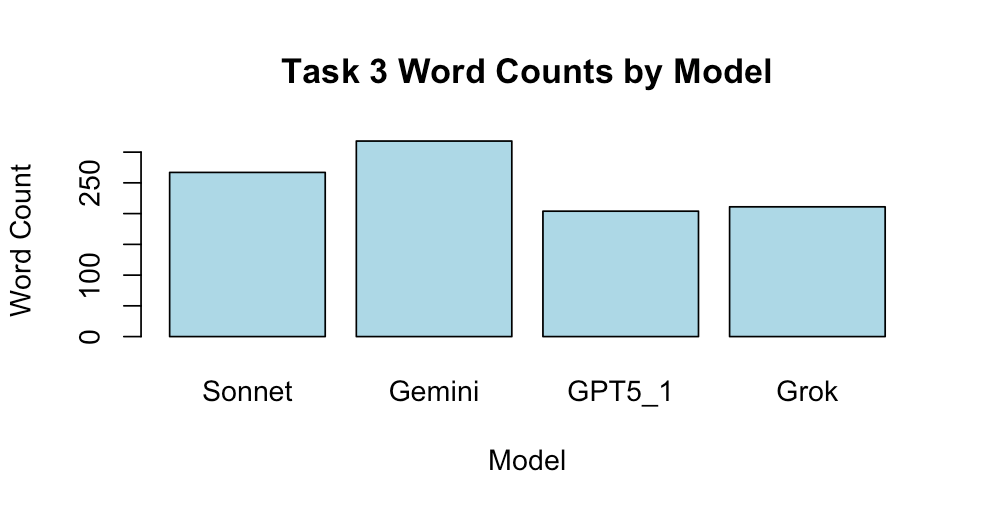

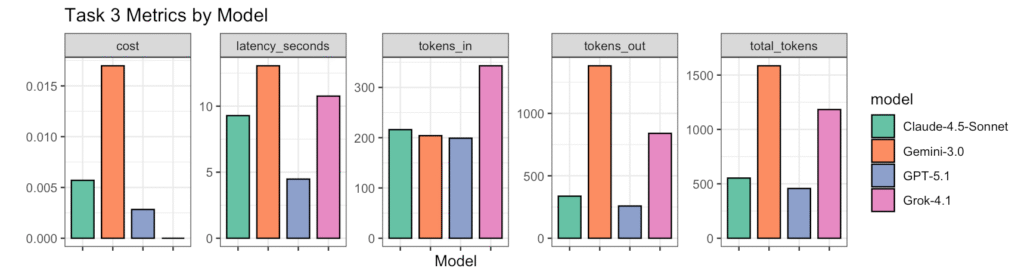

Task 3 Quantitative Analysis (tokens, cost, latency)

Simplest thigns first, how many words did GPT 5.1, Sonnet 4.5, Gemini 3.0 and Grok 4.1 use?

And from there we can look at tokens costs etc…just like we did before.

| Model | tokens_in | tokens_out | total_tokens | cost (USD) | latency (s) |

|---|---|---|---|---|---|

| GPT-5.1 | 199 | 258 | 457 | 0.002829 | 4.48 |

| Claude 4.5 | 216 | 337 | 553 | 0.005703 | 9.28 |

| Gemini 3.0 | 204 | 1381 | 1585 | 0.016980 | 13.05 |

| Grok 4.1 | 343 | 840 | 1183 | 0.000000 | 10.76 |

The tokens-out do not directly correlate with how much they wrote given different tokenizers, but overall:

- GPT-5.1: 258 tokens → shortest Task-3 explanation

- Claude 4.5: 337 tokens → slightly longer than GPT

- Grok 4.1: 840 tokens → over 3× GPT-5.1

- Gemini 3.0: 1381 tokens → by far the longest

This explains why Gemini feels the most verbose in Task 3: nearly 1.4k tokens for a short API explanation., And costs-wise:

- GPT-5.1: $0.00283

- Claude: $0.00570

- Gemini: $0.01698

- Grok: $0.00000 (again, this is a promotion from OpenRouter)

Latency mirrors verbosity…no brainer… the longer the output, the longer the wait.

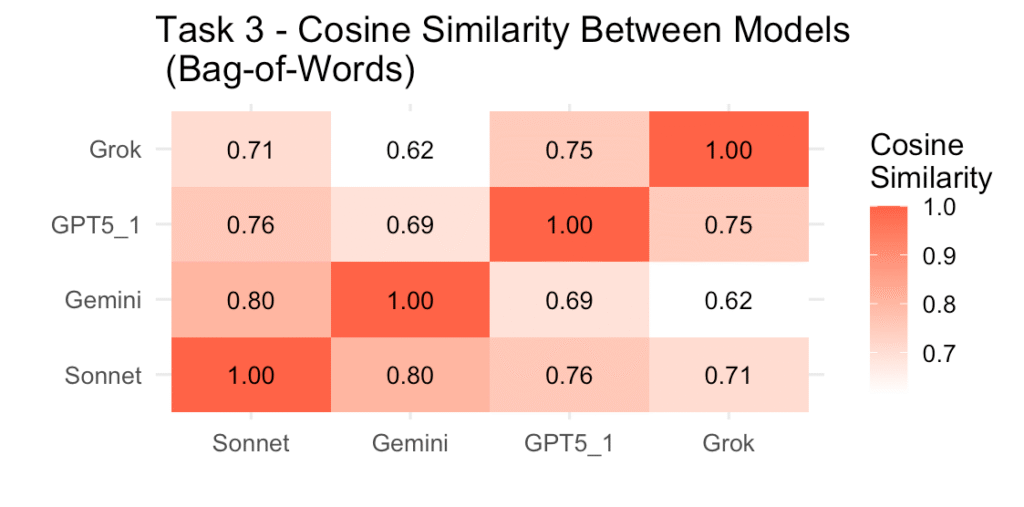

Text Similarity for Task 3

The heatmap summarizing the similarity across output is here:

| Pair | Similarity |

|---|---|

| GPT-5.1 ↔ Gemini | 0.69 |

| GPT-5.1 ↔ Claude | 0.76 |

| GPT-5.1 ↔ Grok | 0.75 |

| Gemini ↔ Claude | 0.80 |

| Gemini ↔ Grok | 0.62 |

| Claude ↔ Grok | 0.71 |

| Average (off-diagonal) | 0.72 |

Interestingly the average similarity = 0.72, which is higher than Task 1, but lower than Task 2. Task 3 explanations are: more similar than the 200-word essays of Task 1, but yet less similar than the historical explanations of Task 2.

Why Task 3 is more aligned than Task 1 (my personal take):

Technical writing is more constrained — each model talks about the same API objects, the same methods, the same features.

Why Task 3 is less aligned than Task 2:

Humanities explanations (Task 2) are extremely formulaic across LLMs. Technical explanations allow more “stylistic freedom” — some go broad (Gemini), some go deep (GPT-5.1), some go practical (Grok).

Which pairs are closest? Well, GPT-5.1 ↔ Claude (0.76) are second in line, but the most similar are Gemini ↔ Claude (0.80).

Instead, which pair is least similar? Gemini ↔ Grok (0.62)

Gemini tends to list more features and examples; Grok is more concise and practical → different lexicon → lower cosine similarity.

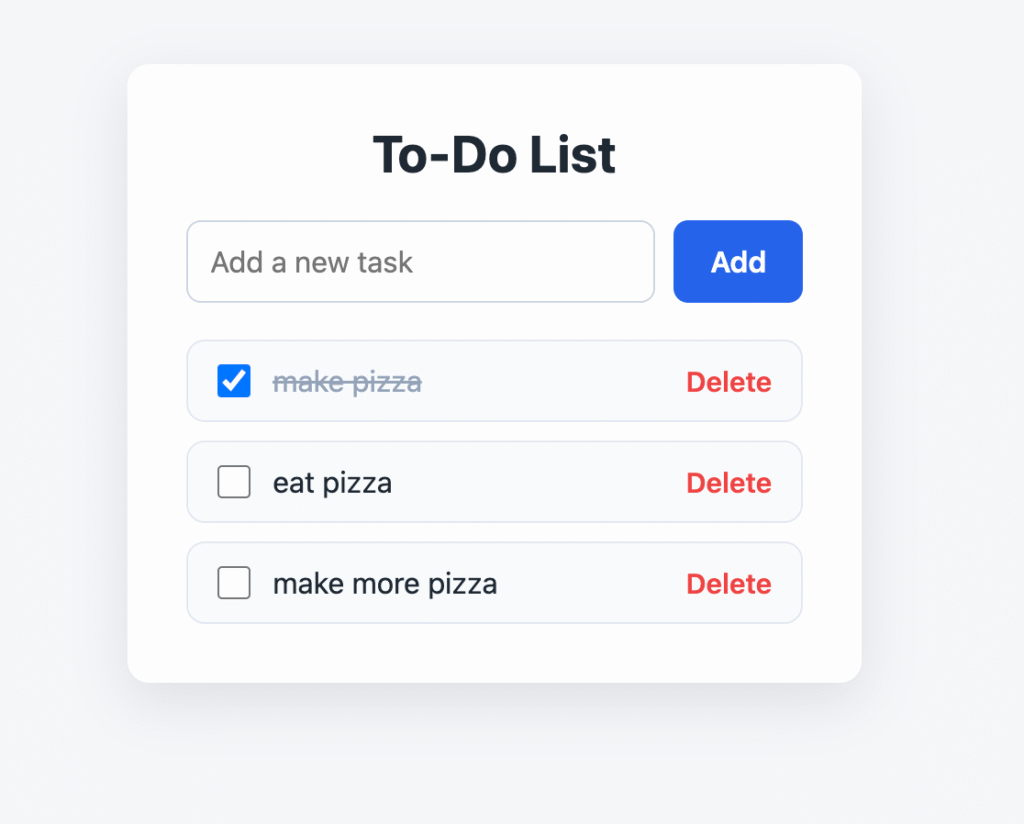

Task 4: Build a complete mini app for me

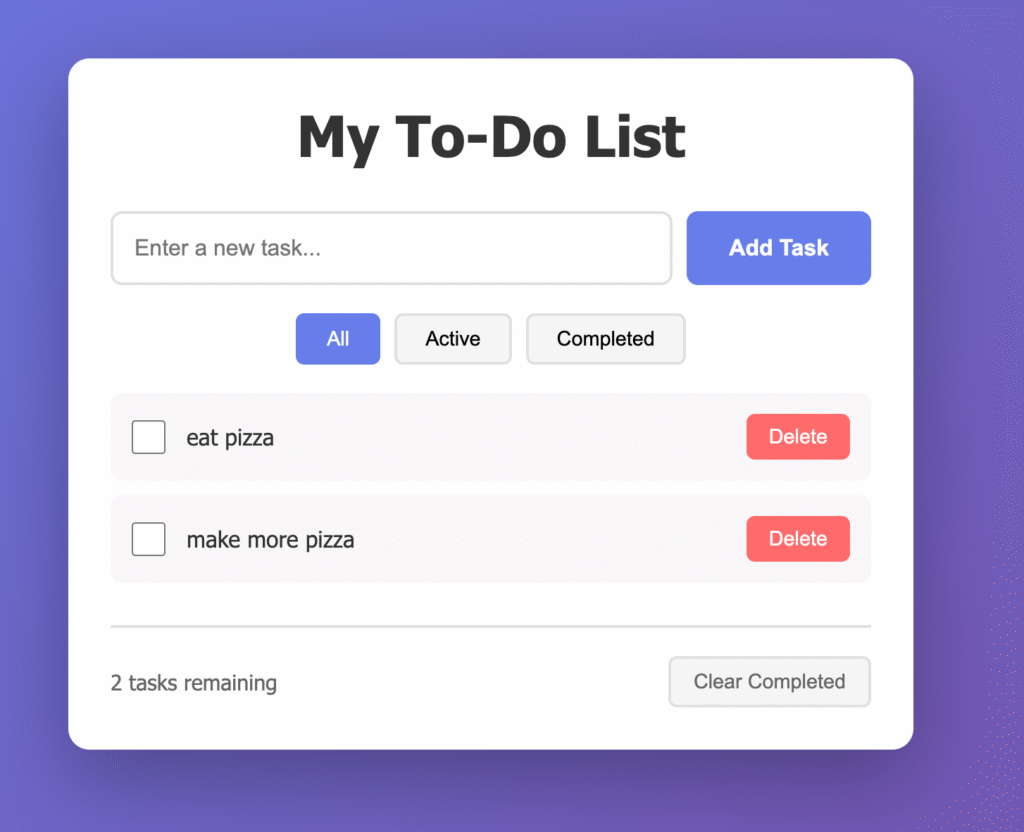

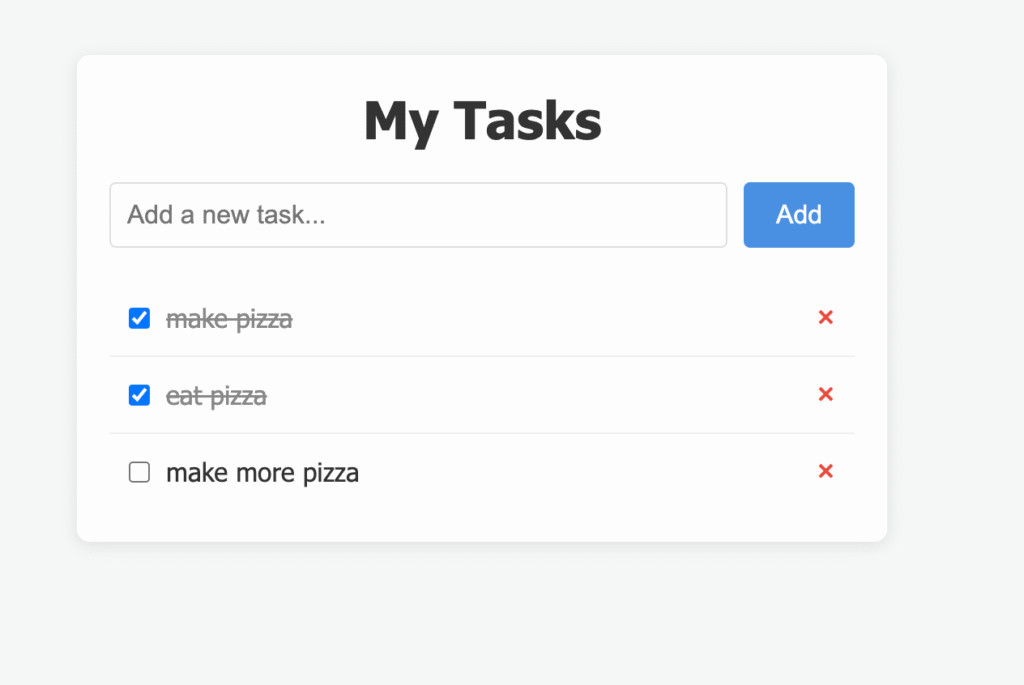

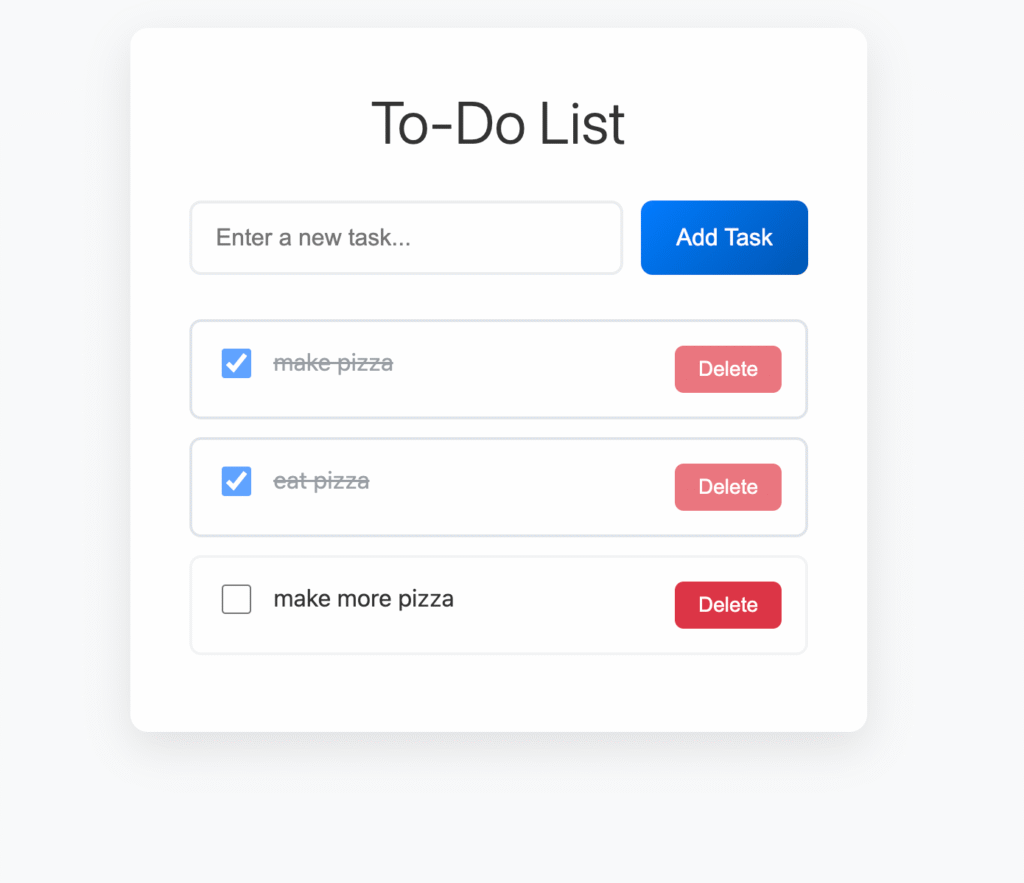

Gemini 3.0, GPT 5.1, Sonnet 4.5 and Grok 4.1 are great to brainstorm, have fun, learn something new etc…but a major application is in agentic coding. Here’s where I tested their ability to build a mini “to-do” app that I can run in my browser. Here’s the prompt:

Write a complete, functional To-Do List web application using HTML, CSS,

and JavaScript with the following features: add tasks, delete tasks, mark

tasks complete, and persist tasks locally (e.g., localStorage).

Code must include clear modular functions and be production-ready.

OUTPUT FORMAT (STRICT):

You MUST respond with a single JSON object with EXACTLY these keys:

- \index.html\

- \style.css\

- \app.js\

Each value MUST be a string containing the full file contents.

ABSOLUTE RULES (MUST FOLLOW):

- The entire response MUST be valid JSON.

- Do NOT wrap the JSON in markdown code fences.

- Do NOT add any keys besides \index.html\, \style.css\, \app.js\.

- Do NOT include comments, explanations, or text outside the JSON.

- Do NOT include trailing commas.Each model produces a three-file code project: index.html, style.css, and app.js.

GPT-5.1 — Qualitative Code Analysis

HTML (index.html)

GPT-5.1 produces tight, minimal, deliberate HTML.

The structure is predictable and professional:

- A clear

<main>container - A top section for inputs

- A middle or bottom section where results will be inserted

- Logical, semantic tags (

<section>,<div>,<button>) - Zero fluff, zero novelty for its own sake

It’s the kind of HTML that looks like something a mid-level frontend engineer would write on a weekday at 3 PM: clean, direct, and free of philosophical experiments.

GPT-5.1 makes no attempt to be “artistic.”

It’s here to get the job done.

CSS (style.css)

GPT-5.1’s CSS tends to be:

- Short

- Practical

- Slightly under-styled

- Focused on layout, not aesthetics

It uses flex or grid sanely, but never goes overboard. Colors are neutral. Spacing is modest. Nothing feels bloated. It avoids unnecessary resets or animations.

JS (app.js)

Technical personality: controlled and structured.

- Defines top-level functions with clear names (

runBenchmark(),renderResult(), etc.) - Avoids global leaks

- Uses

async/awaitin a clean straightforward way - Keeps DOM selection concise (

document.getElementById) - Keeps logic readable and short

GPT-5.1’s code reads like someone purposefully avoiding anything clever.

It’s reliable and easy to modify.

GPT-5.1 Overall is balanced, predictable, clean, easy to maintain. No surprises, no chaos, no brilliance, just simple coding.

Claude 4.5-Sonnet — Qualitative Code Analysis

HTML (index.html)

Claude writes HTML like someone who has read too much MDN documentation.

Not in a bad way — in a careful way.

Claude:

- Uses semantic tags aggressively (

<header>,<main>,<footer>) - Keeps indentation immaculate

- Organizes sections in a way that feels “designed for teaching”

- Comments sparingly but purposefully

Claude’s HTML feels conscientious — you can feel it trying to produce something aligned with modern accessibility and semantic correctness.

CSS (style.css)

Claude’s CSS tends to be smoother than GPT-5.1’s.

It feels a bit more like something a UI-focused engineer would write:

- Consistent spacing scale

- Predictable typography

- Stable color palette

- Clean layout decisions

- Prefers clarity over brevity

Claude reads like it wants the app to feel presentable, not just functional.

JS (app.js)

Claude’s JavaScript is careful, polite, and structured:

- More comments than GPT-5.1

- A slightly more didactic tone

- Strong preference for breaking logic into helper functions

- Defensive checks (

if (!data) return) - Clear separation between “data fetching” and “UI updating”

Claude behaves like a senior engineer mentoring a junior developer by example.

The file is readable in a very “team-friendly” way.

Claude’s code as overall feeling is not that different from GPT 5.1’s code, it feels clean, structured, albeit slightly more polished than GPT-5.1. And it added a purple-ish background maybe in the attempt to add some color-vibe.

Gemini 3.0 — Qualitative Code Analysis

Gemini behaves like someone who tries to show that they know every possible pattern. This results in code that is sometimes impressive, sometimes over-engineered…and if you used Gemini CLI, sometimes is a straight pain in the butt. Anyhow…

HTML (index.html)

Gemini produces the most expansive HTML:

- More sections

- More containers

- More IDs and classes

- More explanatory comments

It often adds extra wrappers, possibly to future-proof layout. It’s clean, but you can tell Gemini wasn’t trying to be minimalist.

CSS (style.css)

Gemini writes CSS like a developer who wants to be scalable:

- Lots of reusable classes

- CSS variables sometimes

- More spacing utilities

- More detailed styling

The result is not messy — just big. If Claude is “well-organized,” Gemini is “comprehensive.”

JS (app.js)

This is where Gemini shows its personality:

- More verbose function names

- More comments than anyone else

- More steps broken out than strictly necessary

- More “scaffolding” describing what could be added later

- Sometimes adds features nobody asked for

Gemini’s JS feels like a coding bootcamp instructor who wants the student to understand every single step.

Gemini 3.0 Overall

Gemini produces the largest codebase by a wide margin:

✔ Most comments

✔ Most modularization

✔ Most wrappers

✔ Most “explanatory overhead”

It’s extremely readable and extremely long.

Simple things take more lines than needed.

Gemini is the documentation-heavy developer.

Grok 4.1 — Qualitative Code Analysis

HTML (index.html)

Grok’s HTML is straightforward, but with a sharper, slightly more utilitarian tone:

- Direct DOM structure

- Crisp naming

- Less concern for semantics than Claude

- Less concern for minimalism than GPT-5.1

- Less concern for comprehensiveness than Gemini

It feels like a developer who wants to move fast and doesn’t care about impressing anyone.

CSS (style.css)

Grok’s CSS is the most “old-school” of the group:

- Lots of straightforward rules

- Less abstraction

- Practical but less elegant than Claude or Gemini

- Sometimes uses older patterns like

floator rigid margins (depending on the model’s tendencies)

It looks like code from someone who learned CSS 8 years ago and stuck to what works.

JS (app.js)

Grok’s JavaScript is the most aggressive and hands-on:

- More explicit DOM manipulation

- Uses simple imperative patterns

- Leans on direct examples rather than abstraction

- Clear, fast, and slightly blunt

- Sometimes the only model that writes specific auth header examples

Grok 4.1 code feels like all of his other responses in this test: straight to the point, and more like an “engineer on a deadline” than “engineer writing for a team”

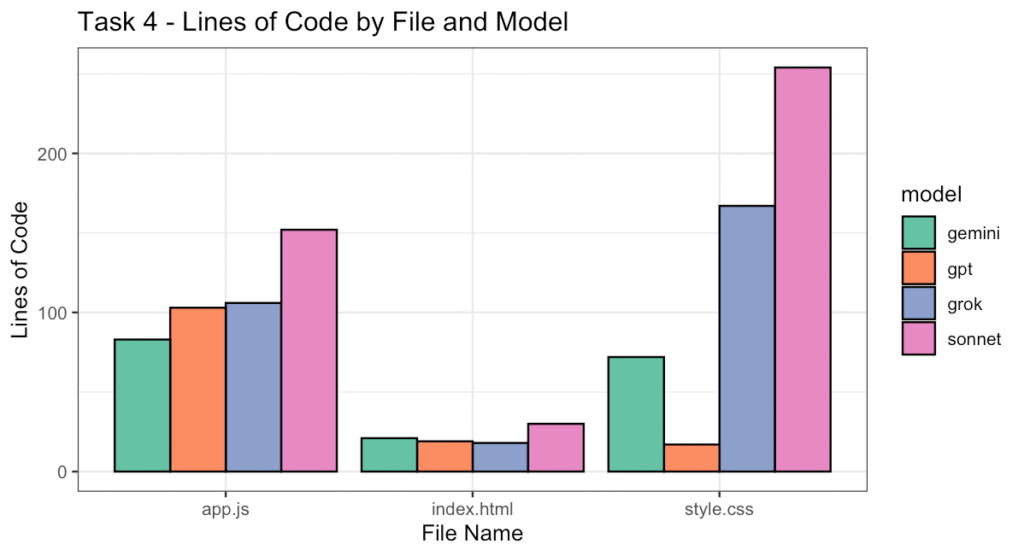

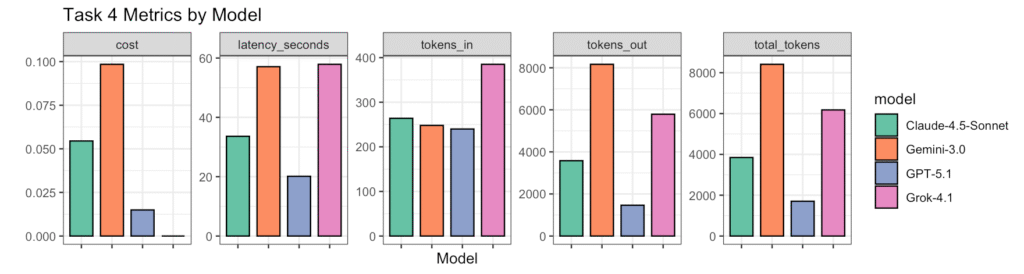

Task 4 Lines of Code, Token Usage, Cost, Latency

While visually the outputs are not that different, and their functionalities are also very very very similar, the number of lines of code per file differs greatly.

And overall, Sonnet 4.5 returned the longest answers as lines of code, with a behemoth 436 lines of code :

| File Name | Gemini 3.0 | GPT 5.1 | Grok 4.1 | Sonnet 4.5 |

| app.js | 83 | 103 | 106 | 152 |

| index.html | 21 | 19 | 18 | 30 |

| style.css | 72 | 17 | 167 | 254 |

| TOTAL | 176 | 139 | 291 | 436 |

When we look at tokens usage and costs, they do not strictly mirror the numbers above due to different tokenizers.

| Model | tokens_in | tokens_out | total_tokens | cost (USD) | latency (s) |

|---|---|---|---|---|---|

| GPT-5.1 | 240 | 1,465 | 1,705 | 0.014950 | 20.14 |

| Claude 4.5 | 264 | 3,581 | 3,845 | 0.054507 | 33.62 |

| Gemini 3.0 | 248 | 8,164 | 8,412 | 0.098464 | 57.08 |

| Grok 4.1 | 385 | 5,792 | 6,177 | 0.000000 | 57.88 |

Here Gemini 3.0 and Grok 4.1 take the leadership with the total number of tokens used, and if Grok 4.1 wasn’t free I assume that the costs would reflect that. The current costs are:

- GPT-5.1 → $0.01495

- Claude 4.5 → $0.05451

- Gemini 3.0 → $0.09846

- Grok 4.1 → $0.00000

With Gemini and Claude being 6.6× and 3.6 more expensive than GPT-5.1, respectively This matches the token usage almost perfectly.

In terms of latency, this also reflects costs and token usage, with GPT-5.1 being the fastest, probably because it writes the least code, Claude is slower but reasonable, but Gemini and Grok take almost a full minute. This was a very limited coding test, but I would expect the corresponding CLIs to reflect this too, directly impacting UX for agentic coding.

The code task is where the models separate the most. GPT-5.1 writes the smallest project. It builds a simple layout, keeps the styling modest, and structures the JavaScript in a clear and predictable way. The result looks like something designed to be easy to maintain. Claude produces code that feels a little more careful. The structure is slightly more polished, the CSS more organized, and the JavaScript more deliberate. Gemini delivers the largest and most commented codebase. It adds wrappers, clarifies steps that don’t need clarification, and creates more layers. Grok works faster and leaves more of its reasoning exposed in the code. It writes functional but less polished files that still get the job done.

Conclusions

The benchmark shows how each model handles (simplified) real work when you give them the same tasks and the same constraints. They all follow the instructions, but they don’t behave the same way. GPT-5.1 keeps the writing tight and stays close to the prompt. Claude slows the pace a bit and shapes the explanation so it reads smoothly. Gemini expands every idea and adds extra angles even when the prompt doesn’t ask for them. Grok moves fast, hits the main points, and doesn’t worry too much about polishing the answer. That pattern is definitely consistent, no matter what task you give them.

You can see the differences clearly in the essays. GPT-5.1 writes the kind of piece you expect from someone who knows the material and doesn’t feel the need to decorate it. Claude sounds like it has an ear for rhythm and aims for a cleaner read without stretching the content. Gemini pushes for density. It packs the same topic with more detail, more examples, more context. Grok sounds more direct and confident, almost like someone (ELon Musk…?) summarizing the same chapter while the others are still outlining. None of these models drift away from the facts, but they frame those facts with different instincts.

Once you align these observations with the numbers, the patterns lock into place. GPT-5.1 writes shorter answers and shorter code, so it responds faster and costs less. Claude writes moderately longer answers and code, so it sits in the middle. Gemini writes far more than the others across every task, which pushes its cost and latency higher even when the final text looks similar in quality. Grok uses more input tokens because of its tokenizer and produces larger outputs, so it tends to land closer to Gemini in size even though its tone is sharper.

Despite their differences, all four models stay accurate across these tasks. They agree on historical facts, core concepts, and technical details. The differences appear in how they deliver the material, how much they write, how long they take, and how much the API call costs. The essays reveal how they handle structured writing. The technical task shows how they explain code. The coding task reveals how disciplined they are when building something that has to work. The full benchmark shows each model’s habits in a way that single examples can’t.

What Should You Use?

If you need short, fast, predictable output, GPT-5.1 behaves that way across everything. If you want smoother phrasing without a large jump in cost, Claude stays consistent. If you want the fullest possible answer and don’t care about size or latency, Gemini always expands the material. If you want direct phrasing and more concrete examples, Grok keeps that style even in code.

The differences aren’t cosmetic. They reflect the way each model organizes information and how aggressively it fills the space you give it. This benchmark doesn’t rank them. It shows you what kind of worker each one becomes when you put them on the same job. Once you understand that, choosing the right model stops being guesswork. It becomes a matter of matching behavior to the outcome you want.

If you fin this helpful let me know, of share it with friends 🙂

If you found any inaccuracies and errors, please let me know so that I can fix them asasp!

And find more cools stuff at letsjustdoai.com.