NVIDIA Blackwell NVL72 Gives Top MoE Models a 10x Speed Boost

NVIDIA’s benchmarks show leading open-source mixture-of-experts (MoE) models running 10x faster on the GB200 NVL72 rack-scale system than on the previous-generation HGX H200. The top 10 most intelligent open-source models on the Artificial Analysis leaderboard all use an MoE architecture, and leaders such as Kimi K2 Thinking, DeepSeek-R1, and Mistral Large 3 are among the models tested, as detailed in NVIDIA’s blog post from December 1, 2025. Yahoo Finance also covered the Kimi gains.

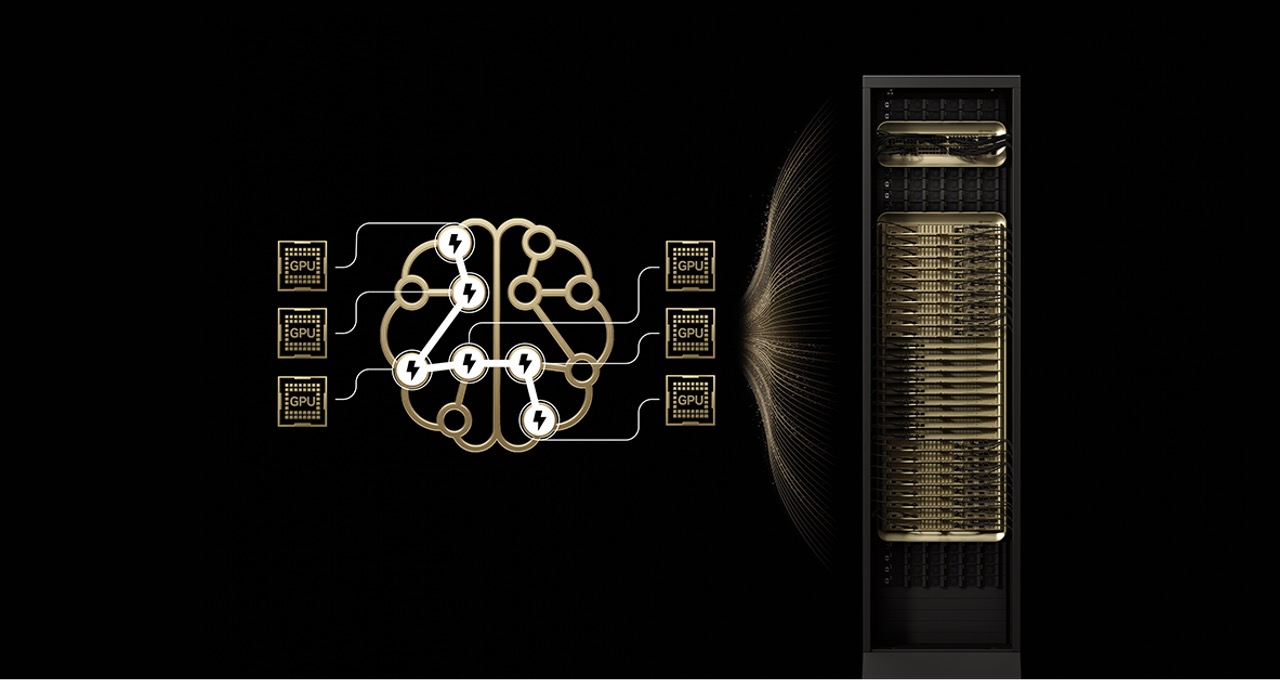

How MoE Works and Why It Matters

MoE models split work across specialized “experts,” much as the brain uses different regions for different tasks. A router selects only the experts each token needs, so a model with hundreds of billions of parameters may activate just tens of billions per token. This cuts compute per token while retaining the capacity of a much larger model.
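To make the routing step concrete, here is a minimal pure-Python sketch of top-k gating, assuming a linear router and a softmax taken over only the k selected scores (the scheme Mixtral uses; other MoE models use different gating functions). The names `router_logits`, `route_token`, and `moe_forward` and the toy experts are hypothetical illustrations, not any framework’s API. The key property is that only the k chosen experts execute: DeepSeek-R1, for example, has 671B total parameters but activates about 37B per token.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(xs: Sequence[float]) -> List[float]:
    # Subtract the max before exponentiating for numerical stability.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def router_logits(x: Vector, w_router: Sequence[Vector]) -> List[float]:
    # One score per expert: dot product of the token with that expert's router row.
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w_router]


def route_token(logits: Sequence[float], k: int) -> List[Tuple[int, float]]:
    # Keep the k highest-scoring experts, then softmax over only those k scores.
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    gates = softmax([logits[i] for i in top])
    return list(zip(top, gates))


def moe_forward(x: Vector, w_router: Sequence[Vector],
                experts: Sequence[Expert], k: int) -> Vector:
    # Gate-weighted sum of the selected experts' outputs; other experts never run.
    out = [0.0] * len(x)
    for idx, gate in route_token(router_logits(x, w_router), k):
        y = experts[idx](x)
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out


if __name__ == "__main__":
    calls: List[int] = []

    def make_expert(i: int) -> Expert:
        # Toy expert i scales its input by (i + 1) and records that it ran.
        def expert(x: Vector) -> Vector:
            calls.append(i)
            return [(i + 1) * v for v in x]
        return expert

    num_experts, k = 8, 2
    experts = [make_expert(i) for i in range(num_experts)]
    # Hypothetical router weights: expert i's row is [i, 0], so for x = [1.0, 0.5]
    # the scores are 0..7 and experts 7 and 6 win.
    w_router = [[float(i), 0.0] for i in range(num_experts)]
    moe_forward([1.0, 0.5], w_router, experts, k)
    print(sorted(calls))
```

Running it prints `[6, 7]`: with these hypothetical router weights, only the two highest-scoring experts run, while the other six stay idle for this token.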

Over 60% of open-source AI model releases in 2025 use MoE, which NVIDIA says has contributed to a 70x increase in model intelligence since early 2023. Guillaume Lample of Mistral AI notes that the company’s MoE work, beginning with Mixtral 8x7B, makes advanced AI more accessible and less power-hungry.

The Benchmarks: Real 10x Gains

Kimi K2 Thinking, the top-ranked open-source model on Artificial Analysis, achieves 10x higher generation performance on GB200 NVL72 than on HGX H200, and DeepSeek-R1 and Mistral Large 3 show the same 10x gain. TechPowerUp reported the results on December 2, as did HotHardware and Wccftech, highlighting how NVIDIA’s design addresses MoE scaling bottlenecks. MarkTechPost covered the Mistral results in detail.

The gains apply to inference and come with 10x better performance per watt. At GTC, Jensen Huang noted that GB200 NVL72 delivers this improvement for DeepSeek-R1 and its variants.

What Enables the Speed on NVL72

GB200 NVL72 packs 72 Blackwell GPUs into a single rack, delivering 1.4 exaflops of AI performance with 30 TB of fast shared memory, all connected by an NVLink fabric with 130 TB/s of bandwidth. It addresses two MoE bottlenecks that limit H200-based setups:

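- Memory pressure from loading expert weights. Spreading the experts across 72 GPUs leaves fewer experts on each GPU.

- Slow all-to-all communication between experts. NVLink connects all 72 GPUs in one domain, so expert traffic stays on NVLink instead of leaving an 8-GPU NVLink domain for slower networking.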
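The memory effect can be illustrated with simple arithmetic. The sketch below assumes 256 routed experts (DeepSeek-R1’s count, ignoring its shared expert), plain round-robin placement, and no expert replication; production serving stacks use their own placement and load-balancing schemes. It compares an 8-GPU HGX H200 system with the 72 GPUs of an NVL72 rack and counts the token copies each GPU sends in the all-to-all dispatch step. All function names are hypothetical.

```python
import math
from collections import Counter
from typing import Dict, Sequence


def place_experts(num_experts: int, num_gpus: int) -> Dict[int, int]:
    # Hypothetical round-robin placement: expert e lives on GPU e % num_gpus.
    return {e: e % num_gpus for e in range(num_experts)}


def max_experts_per_gpu(num_experts: int, num_gpus: int) -> int:
    # Under round-robin placement the busiest GPU holds ceil(E / G) experts.
    return math.ceil(num_experts / num_gpus)


def dispatch_counts(token_experts: Sequence[Sequence[int]],
                    token_src_gpu: Sequence[int],
                    placement: Dict[int, int]) -> Counter:
    # Count token copies sent from each source GPU to each destination GPU
    # during the all-to-all dispatch; the combine step sends results back.
    traffic: Counter = Counter()
    for experts, src in zip(token_experts, token_src_gpu):
        for e in experts:
            traffic[(src, placement[e])] += 1
    return traffic


def remote_copies(traffic: Counter) -> int:
    # Copies whose destination differs from their source must cross the interconnect.
    return sum(n for (src, dst), n in traffic.items() if src != dst)


if __name__ == "__main__":
    for gpus in (8, 72):
        print(gpus, "GPUs:", max_experts_per_gpu(256, gpus), "experts on the busiest GPU")
    # Two tokens, each routed to k = 2 experts, on a toy 16-expert, 8-GPU placement.
    traffic = dispatch_counts([[0, 9], [1, 2]], [0, 1], place_experts(16, 8))
    print("remote copies:", remote_copies(traffic))
```

Spreading the same 256 experts over 72 GPUs cuts the busiest GPU’s share from 32 to 4 experts. The all-to-all traffic does not go away, though: with more GPUs, a larger share of each token’s expert visits lands on a remote GPU, which is why the interconnect matters as much as the memory.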

Inference frameworks such as TensorRT-LLM, SGLang, and vLLM, along with NVIDIA Dynamo for disaggregated serving and the NVFP4 4-bit format, add further gains. Cloud providers such as CoreWeave and Together AI deploy the system for production MoE workloads.
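NVFP4 is a block-scaled 4-bit floating-point format: values are stored as FP4 (E2M1) with a shared scale factor per 16-element block. The sketch below shows the core idea in pure Python under simplifying assumptions: the per-block scale is kept as a Python float rather than encoded in FP8 (E4M3), the second-level per-tensor scale is omitted, and ties round toward the smaller magnitude. It illustrates block scaling and is not NVIDIA’s implementation.

```python
from typing import List, Sequence, Tuple

# Non-negative magnitudes representable by the FP4 E2M1 format.
E2M1_VALUES = (0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0)
E2M1_MAX = 6.0
BLOCK_SIZE = 16  # one shared scale per 16 values

Block = Tuple[float, List[float]]


def quantize_block(block: Sequence[float]) -> Block:
    # Scale the block so its largest magnitude maps to the largest E2M1 value.
    amax = max(abs(v) for v in block)
    scale = amax / E2M1_MAX if amax > 0 else 1.0
    codes = []
    for v in block:
        mag = abs(v) / scale
        # Snap to the nearest representable magnitude (ties go to the smaller one).
        q = min(E2M1_VALUES, key=lambda c: abs(c - mag))
        codes.append(q if v >= 0 else -q)
    return scale, codes


def quantize(values: Sequence[float]) -> List[Block]:
    # Split the tensor into 16-element blocks, each with its own scale.
    return [quantize_block(values[i:i + BLOCK_SIZE])
            for i in range(0, len(values), BLOCK_SIZE)]


def dequantize(blocks: Sequence[Block]) -> List[float]:
    # Multiply each 4-bit code back by its block's scale.
    return [scale * c for scale, codes in blocks for c in codes]


if __name__ == "__main__":
    print(dequantize(quantize([12.0, 1.0, -0.3, 5.0])))
```

Because each 16-value block gets its own scale, a large value in one block does not cost precision in the others, which is what lets such a narrow format stay usable for model weights.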

Real-World Impact

CoreWeave’s Peter Salanki says the system brings speed and reliability to MoE-based agentic workflows. Fireworks AI’s Lin Qiao expects it to transform large-scale MoE serving. DeepL uses it to train efficient MoE models, according to research lead Paul Busch. Together AI’s Vipul Ved Prakash credits full-stack optimizations for DeepSeek-V3 results that exceeded expectations.

For operators, this means lower cost per token, more users served, and better efficiency in power-limited data centers. The same MoE patterns extend to multimodal and agentic AI, where experts can be shared across tasks to scale further.
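The cost claim follows from simple arithmetic: at a fixed power budget, energy per token is power divided by throughput, so a 10x throughput gain cuts energy per token by 10x. The sketch below uses hypothetical numbers (a 100 kW budget, 50,000 versus 500,000 tokens per second, and $0.10 per kWh), which are not NVIDIA figures, and counts electricity only, not hardware or other operating costs.

```python
def energy_per_token_joules(power_watts: float, tokens_per_second: float) -> float:
    # Watts are joules per second, so dividing by tokens/s gives joules per token.
    return power_watts / tokens_per_second


def electricity_cost_per_million_tokens(power_watts: float,
                                        tokens_per_second: float,
                                        usd_per_kwh: float) -> float:
    joules = energy_per_token_joules(power_watts, tokens_per_second) * 1_000_000
    kwh = joules / 3.6e6  # 1 kWh = 3.6 million joules
    return kwh * usd_per_kwh


if __name__ == "__main__":
    power = 100_000.0  # hypothetical 100 kW power budget
    price = 0.10       # hypothetical electricity price, USD per kWh
    for label, tps in (("baseline", 50_000.0), ("10x faster", 500_000.0)):
        print(label,
              energy_per_token_joules(power, tps), "J/token,",
              round(electricity_cost_per_million_tokens(power, tps, price), 4),
              "USD per 1M tokens")
```

With these assumed numbers the baseline uses 2 J per token and the faster system 0.2 J per token. Read the other way, the same power cap supports 10x more tokens per second, which is where the "more users served" benefit comes from.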