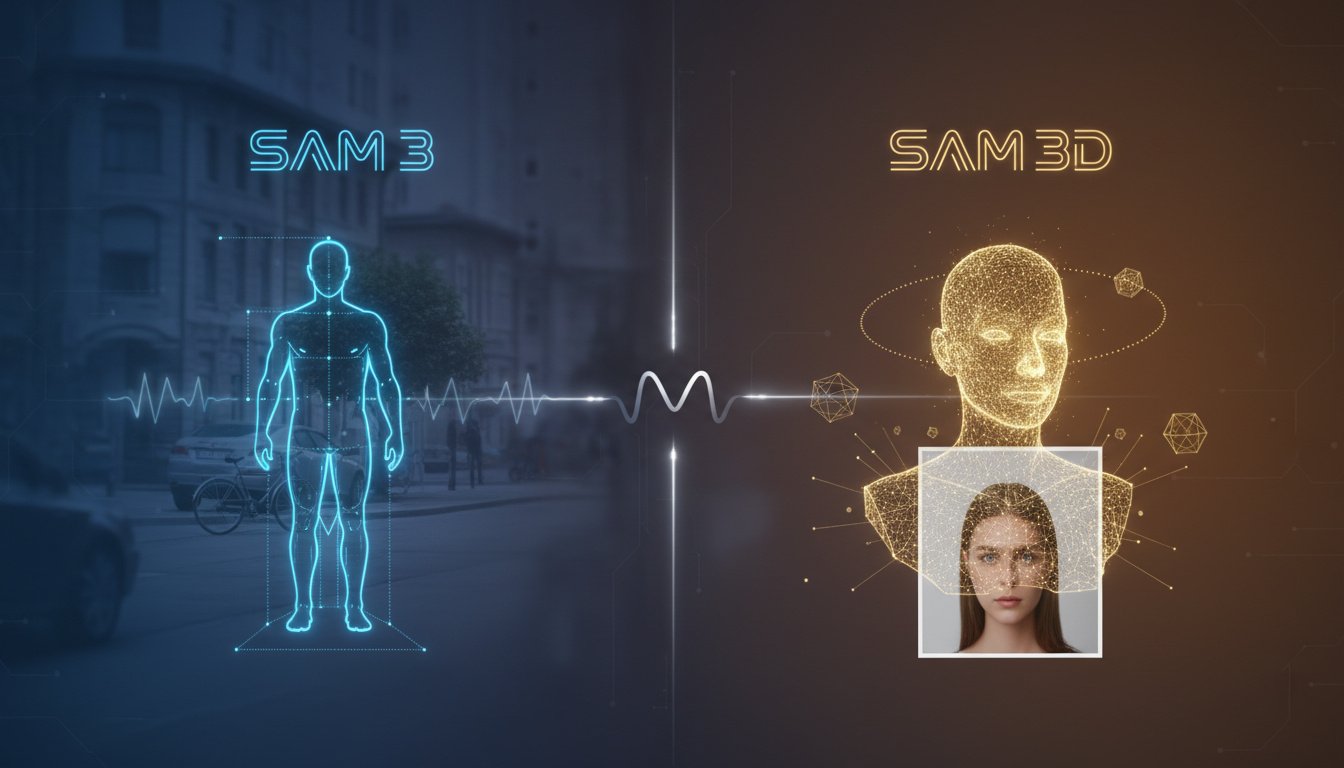

SAM 3 vs SAM 3D: Meta’s Push into Better Segmentation and 3D Reconstruction

Meta released SAM 3 and SAM 3D in November 2025 as open-source additions to the Segment Anything Model family. SAM 3 handles 2D segmentation and tracking in images and videos. SAM 3D goes a step further and recovers 3D shape and depth from a single 2D photo. Both build on the original SAM from 2023, but they target different needs in computer vision.

SAM 3: Segmentation and Tracking with Text Prompts

SAM 3 improves on SAM 1 and SAM 2 by accepting text prompts alongside visual ones. Tell it “red baseball cap” or “yellow school bus,” and it finds, segments, and tracks every matching object across video frames, giving each its own mask and ID. The Edge AI and Vision Alliance piece says it handles over 270,000 visual concepts, a big jump from earlier versions, which were prompted with clicks or boxes on one object at a time.
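To make "a unique mask and ID" concrete, here is a minimal sketch of how per-frame masks can be linked into persistent identities. It is not SAM 3's tracker: it swaps in plain greedy IoU matching, and the names `iou`, `assign_ids`, `box`, and the 0.5 threshold are assumptions chosen for illustration. Masks are modeled as sets of pixel coordinates so the example needs only the standard library.

```python
from itertools import count


def box(r0, c0, r1, c1):
    """Helper: a rectangular mask as a set of (row, col) pixels."""
    return frozenset((r, c) for r in range(r0, r1) for c in range(c0, c1))


def iou(a, b):
    """Intersection over union of two pixel sets."""
    union = len(a | b)
    return len(a & b) / union if union else 0.0


def assign_ids(frames, threshold=0.5):
    """Give each mask an ID that persists across frames.

    frames: list of frames, each a list of masks (pixel sets).
    Returns one dict per frame mapping object ID -> mask.
    A mask inherits the ID of the best-overlapping mask from the
    previous frame; otherwise it starts a new ID.
    """
    next_id = count(1)
    previous = {}
    tracked = []
    for masks in frames:
        current = {}
        unmatched = dict(previous)  # IDs from the last frame not yet claimed
        for mask in masks:
            best = max(unmatched, key=lambda i: iou(unmatched[i], mask), default=None)
            if best is not None and iou(unmatched[best], mask) >= threshold:
                obj_id = best
                del unmatched[best]
            else:
                obj_id = next(next_id)
            current[obj_id] = mask
        tracked.append(current)
        previous = current
    return tracked


# Two objects that each shift one pixel to the right; detection order is swapped.
frame0 = [box(0, 0, 4, 4), box(10, 10, 14, 14)]
frame1 = [box(10, 11, 14, 15), box(0, 1, 4, 5)]
tracked = assign_ids([frame0, frame1])
print(tracked[1][1] == box(0, 1, 4, 5))  # object 1 keeps its ID despite the reordering
```

The point of the sketch is the output shape: one mask per object per frame, keyed by an ID that stays stable as the object moves.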

As detailed in the InfoQ article, SAM 3 has a new architecture that produces sharper boundaries and copes better with overlapping objects, small objects, difficult lighting, and occlusions. The article also reports faster inference on GPUs and mobile hardware, with support for PyTorch, ONNX, and web runtimes. Meta positions it for AR/VR, video editing, robotics, and data labeling, where generating masks from plain language cuts down on manual work. A hands-on review at Analytics Vidhya praises it for both image and video work.

SAM 3D: Lifting 2D Images to 3D Shapes

SAM 3D comes in two flavors: SAM 3D Objects for everyday things and SAM 3D Body for human shapes. Click any object in a photo, even a small or partly hidden one, and it rebuilds the full 3D model and estimates its distance from the camera. The Caltech news piece on co-lead Georgia Gkioxari explains how the team trained it despite scarce 3D data: a loop in which the AI proposes 3D shapes from 2D images, humans pick the best ones using common sense, and those picks are fed back to improve the model.
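The propose, pick, retrain loop is easier to see in code. The sketch below is a toy under stated assumptions, not Meta's training pipeline: `data_engine_round`, `ToyModel`, and `toy_annotator` are hypothetical names, an "image" is just a number, and the "3D shape" is a single height value so the whole loop runs with the standard library.

```python
from statistics import mean
from typing import Callable, List, Optional, Tuple


def data_engine_round(
    images: List[float],
    propose: Callable[[float], List[float]],
    pick_best: Callable[[float, List[float]], Optional[int]],
) -> List[Tuple[float, float]]:
    """One pass: the model proposes, the annotator selects, winners become labels."""
    labeled = []
    for image in images:
        candidates = propose(image)
        choice = pick_best(image, candidates)  # index of the best candidate, or None to reject all
        if choice is not None:
            labeled.append((image, candidates[choice]))
    return labeled


class ToyModel:
    """Stand-in for a 3D predictor: guesses height as scale * image value."""

    def __init__(self, scale: float = 1.0):
        self.scale = scale

    def propose(self, image: float) -> List[float]:
        # Offer a few candidates around the model's current belief.
        return [image * (self.scale + delta) for delta in (-0.2, 0.0, 0.2)]

    def retrain(self, labeled: List[Tuple[float, float]]) -> None:
        # "Training" here is just refitting the scale to the selected labels.
        if labeled:
            self.scale = mean(label / image for image, label in labeled)


def toy_annotator(image: float, candidates: List[float]) -> int:
    """Stand-in for human judgment: the true height is 2 * image value."""
    truth = 2.0 * image
    return min(range(len(candidates)), key=lambda i: abs(candidates[i] - truth))


def run_engine(rounds: int = 6) -> float:
    model = ToyModel()
    images = [1.0, 2.0, 4.0]
    for _ in range(rounds):
        model.retrain(data_engine_round(images, model.propose, toy_annotator))
    return model.scale


print(round(run_engine(), 3))  # the scale drifts from 1.0 toward the true value of 2.0
```

The annotator never has to produce a 3D label from scratch. Choosing among candidates is the cheap step, and each round of choices pulls the model closer to what people judge to be right.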

Edge AI describes the SAM 3D models as companions to SAM 3 that are already in use for 3D product views on Facebook Marketplace and for robot manipulation demos. Roboflow covers setup for single-image 3D reconstruction with depth. UploadVR shows it pulling objects into VR quickly. Caltech highlights applications in biology, gaming, retail, and security.

How They Compare

SAM 3 stays in 2D, outputting masks for images or video clips. SAM 3D outputs 3D meshes from one image. SAM 3 shines with text for multiple objects and motion; SAM 3D tackles the “lift to 3D” problem for robotics or AR, where machines need spatial understanding beyond flat photos.

- SAM 3 prompts: text, points, boxes; works on video.

- SAM 3D prompts: clicks on objects; single images to 3D.

- Training edge for SAM 3: bigger dataset for real-world scenes.

- Training edge for SAM 3D: human-AI data loop to scale 3D labels cheaply.

Together, they fit into pipelines (use SAM 3 to segment, then SAM 3D to reconstruct) for tasks like robot chores in cluttered rooms, as Gkioxari describes.
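As a rough sketch of that two-stage pipeline, the code below wires a segmenter and a reconstructor together behind plain function interfaces. Everything here is hypothetical: `Instance`, `Object3D`, `segment_then_reconstruct`, and the two placeholder stages are illustrative names, not the SAM 3 or SAM 3D APIs. In practice the placeholders would be replaced by calls into the real models.

```python
from dataclasses import dataclass
from typing import Callable, List, Set, Tuple

Pixel = Tuple[int, int]


@dataclass
class Instance:
    """2D result for one object: an ID plus its mask pixels."""
    object_id: int
    mask: Set[Pixel]


@dataclass
class Object3D:
    """3D result for one object: mesh vertices plus distance from the camera."""
    object_id: int
    vertices: List[Tuple[float, float, float]]
    distance_m: float


Segmenter = Callable[[object, str], List[Instance]]
Reconstructor = Callable[[object, Instance], Object3D]


def segment_then_reconstruct(
    image: object, prompt: str, segment: Segmenter, reconstruct: Reconstructor
) -> List[Object3D]:
    """Stage 1 finds every instance matching the prompt; stage 2 lifts each one to 3D."""
    return [reconstruct(image, instance) for instance in segment(image, prompt)]


# Placeholder stages so the sketch runs without any model installed.
def fake_segment(image: object, prompt: str) -> List[Instance]:
    return [Instance(1, {(0, 0), (0, 1)}), Instance(2, {(5, 5)})]


def fake_reconstruct(image: object, instance: Instance) -> Object3D:
    return Object3D(instance.object_id, [(0.0, 0.0, 1.0)], distance_m=1.0)


objects = segment_then_reconstruct(None, "coffee mug", fake_segment, fake_reconstruct)
print([obj.object_id for obj in objects])  # IDs carry over from the 2D stage to the 3D stage
```

Keeping the object ID on both result types is the design choice that matters: a robot planner can then refer to the same object in 2D (for tracking) and in 3D (for grasping).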